🏷️ Tech Stack: Python • Pandas • NumPy • AI-Assisted Development • Data Analysis • Threat Intelligence • Automation • Palo Alto Networks

Professional Project - Orange Réunion Network Department | 2024-2025

⚠️ Disclaimer: All IP addresses, timestamps, and vulnerability identifiers (CVEs) included in this document have been randomly generated or modified for educational and illustrative purposes only. No confidential files or sensitive data were used.

Project Overview

During my apprenticeship at Orange Réunion’s IP Network Department, I identified a critical operational challenge: the manual analysis of IPS/IDS threat logs was consuming 1-2 hours daily to process only 5-10 attackers, severely limiting our security response capabilities. I conceived and developed an automated Python solution that transforms this time-intensive process into an efficient, scalable threat analysis system.

Business Context: Orange Réunion’s web services infrastructure protection

Challenge: Manual log analysis bottleneck limiting security response

Solution: AI-assisted development of automated threat detection tool

Impact: From hours to minutes for comprehensive threat analysis

📋 TL;DR - Executive Summary

Problem: Manual analysis of Palo Alto IPS/IDS threat logs was consuming 1-2 hours daily to process only 5-10 attackers, creating a critical security bottleneck at Orange Réunion.

Solution: Developed a Python-based automated threat intelligence tool using AI-assisted development methodology, implementing three complementary detection algorithms (CVE diversity, volume analysis, and hybrid intelligence).

Impact: Reduced analysis time from hours to <10 minutes while scaling from 5-10 to 100+ IPs per cycle. Tool now processes comprehensive threat data and generates actionable block lists with complete evidence packages.

Innovation: Applied strategic AI-assisted development approach, focusing on security logic design while leveraging AI for implementation acceleration.

🎯 Business Challenge & Solution

IPS/IDS Context & Limitations

Palo Alto’s IPS/IDS module provides comprehensive threat protection with an extensive CVE database and configurable severity classifications (Critical, High, Medium, Low, Informational). The system automatically blocks attacks based on predefined criteria and maintains temporary IP blocking with configurable durations according to internal security policies.

However, these automated protections have inherent limitations. Sophisticated attackers understand these blocking mechanisms and adapt their strategies accordingly. They return after blocking periods expire, test detection thresholds to understand how to operate “under the noise,” and deliberately design low-profile, persistent campaigns to remain invisible while continuing reconnaissance or targeted attacks.

The core challenge: While automated IPS/IDS systems effectively block obvious threats, advanced persistent threats require deeper log analysis to detect subtle behavioral patterns that automated rules miss. This analysis—whether human, software-based, or AI-assisted—is essential for understanding sophisticated attack campaigns and identifying threats that deliberately evade standard detection thresholds.

This need for deeper analysis translated into a significant operational bottleneck. Our network team was required to manually review thousands of IPS/IDS threat logs daily to identify these sophisticated, under-the-radar attacks that automated systems missed.

The Problem

Imagine being a security guard who must manually review thousands of surveillance alerts every day, deciding which ones represent real threats. This was my daily reality.

Our firewalls were detecting hundreds of potential cyberattacks, but manual analysis of the daily IPS/IDS logs from our Palo Alto firewalls severely limited our security operations:

- Time Intensive: 1-2 hours to analyze 5-10 attackers maximum

- Inconsistent Analysis: Human factor introducing variability in threat assessment

- Limited Scalability: Unable to process high-volume attack scenarios

- Documentation Overhead: Manual report generation for management and technical teams

Think of it this way: if a bank security team could only investigate 5-10 suspicious individuals per day out of thousands passing through, most actual threats would go unnoticed.

The Solution

I developed a Python-based system that acts as a pattern recognition engine, analyzing hundreds of potential threats at once through several complementary screening algorithms.

The solution transforms our security operations by providing:

- Automated Threat Recognition: Analyzing every single potential threat

- Instant Intelligence Reports: Comprehensive analysis delivered in minutes, not hours

- Scalable Processing: Handle massive log volumes without human bottlenecks

- Evidence-Based Decisions: Every recommendation comes with complete justification and proof

🛠️ Technical Architecture & Innovation

Core Technology Stack

- Python 3.11: Programming language used to build the multi-algorithm engine, three different “detective approaches” working together to identify threats

- Pandas & NumPy: Libraries used to handle large volumes of security events and to power the data processing pipeline that turns them into actionable intelligence

🛠️ Technical Architecture

Palo Alto Threat Log Integration

This tool is specifically engineered for Palo Alto Networks threat log CSV exports, leveraging the standardized field structure for immediate deployment in PA-based security infrastructures.

Native CSV Field Processing

# Key Palo Alto CSV fields used by the analysis engine (with example values)
required_fields = {
    'Threat/Content Type': 'vulnerability',   # Filter for vulnerability alerts
    'Source address': '95.166.2.100',         # Attacker IP address
    'Threat/Content Name': 'CVE-2023-1234',   # Specific vulnerability identifier
    'Receive Time': '2025/01/15 14:30:25',    # Timestamp for temporal analysis
    'Source Country': 'France',               # Geographic intelligence
    'Severity': 'Critical',                   # Risk classification
}
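As a rough illustration of how such an export might be loaded, here is a minimal sketch with pandas. The function name `load_threat_log`, the column check, and the inline sample rows are assumptions made for this example, not the tool's actual code; only the column names and the timestamp format come from the field list above.

```python
import io

import pandas as pd

# Columns listed in the article; validating them up front is an assumption.
REQUIRED_COLUMNS = [
    "Threat/Content Type",
    "Source address",
    "Threat/Content Name",
    "Receive Time",
    "Source Country",
    "Severity",
]


def load_threat_log(source):
    """Read a threat log CSV export and keep only vulnerability rows."""
    df = pd.read_csv(source)
    missing = [col for col in REQUIRED_COLUMNS if col not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    # Timestamp layout taken from the sample value above (YYYY/MM/DD HH:MM:SS).
    df["Receive Time"] = pd.to_datetime(df["Receive Time"], format="%Y/%m/%d %H:%M:%S")
    return df[df["Threat/Content Type"] == "vulnerability"].reset_index(drop=True)


# Tiny made-up sample standing in for a real export.
SAMPLE_CSV = """Threat/Content Type,Source address,Threat/Content Name,Receive Time,Source Country,Severity
vulnerability,95.166.2.100,CVE-2023-1234,2025/01/15 14:30:25,France,Critical
spyware,95.166.2.101,Example Spyware,2025/01/15 14:31:00,France,Low
"""

vulns = load_threat_log(io.StringIO(SAMPLE_CSV))
print(vulns[["Source address", "Threat/Content Name", "Severity"]])
```

Failing early on a missing column keeps a malformed export from silently producing an empty block list.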

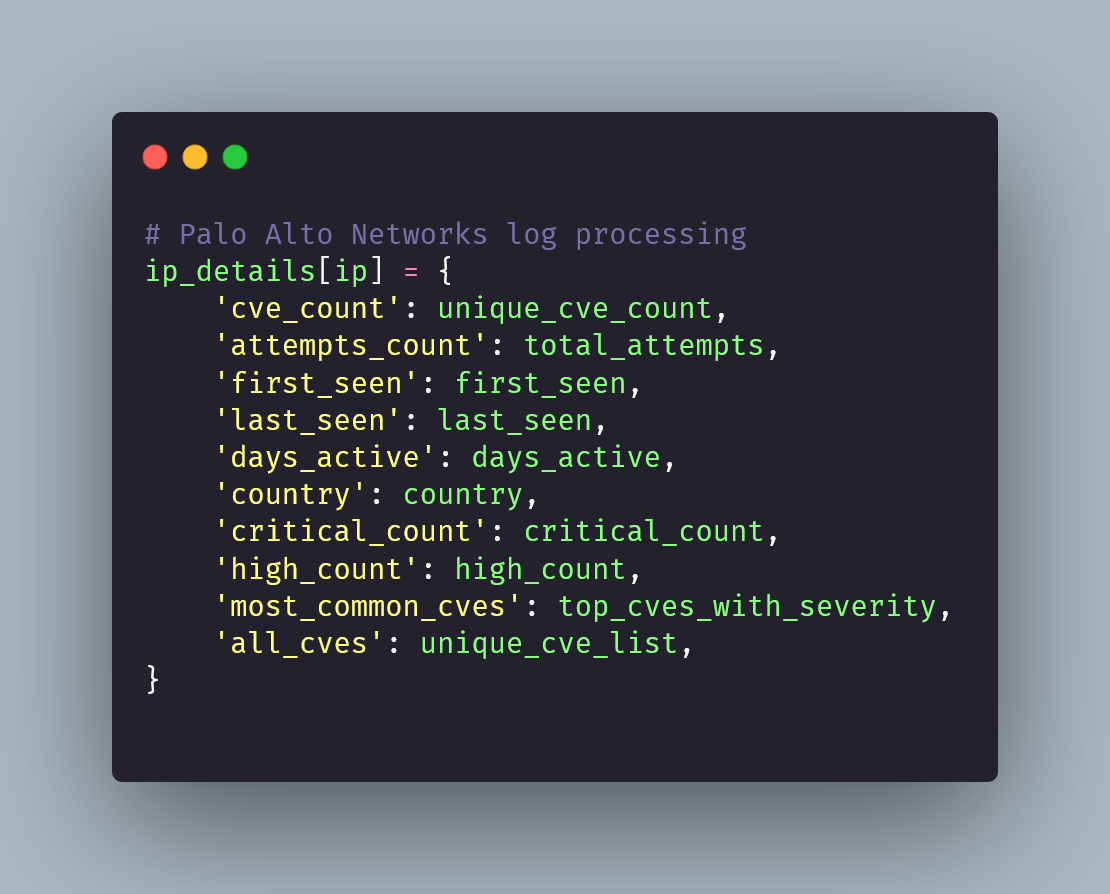

Intelligence Extraction Capabilities

The system transforms raw log entries into actionable intelligence:

- Temporal persistence: Attack duration and frequency patterns

- Geographic risk assessment: Local vs international threat differentiation

- Vulnerability targeting: CVE diversity and exploitation frequency

- Severity-based prioritization: Risk classification and escalation logic
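The four capabilities above can be pictured as a per-IP summary table. The sketch below is one possible way to build it with pandas; `build_ip_profiles`, the output column names, and the definition of "days active" as the number of distinct calendar days with at least one alert are all assumptions for illustration.

```python
import pandas as pd


def build_ip_profiles(vulns):
    """Summarise each source IP: volume, diversity, persistence, origin, severity."""
    # Assumed definition: a day counts as active if it has at least one alert.
    days = vulns["Receive Time"].dt.normalize()
    grouped = vulns.assign(day=days).groupby("Source address")
    profiles = grouped.agg(
        total_attempts=("Threat/Content Name", "count"),
        unique_threats=("Threat/Content Name", "nunique"),
        first_seen=("Receive Time", "min"),
        last_seen=("Receive Time", "max"),
        days_active=("day", "nunique"),
        country=("Source Country", "first"),
    )
    # One column per severity level, counting alerts at that level.
    severity_counts = pd.crosstab(vulns["Source address"], vulns["Severity"].str.lower())
    return profiles.join(severity_counts)


# Made-up alerts for two documentation-range addresses.
sample = pd.DataFrame({
    "Source address": ["203.0.113.5", "203.0.113.5", "203.0.113.5", "198.51.100.9"],
    "Threat/Content Name": ["CVE-A", "CVE-B", "CVE-A", "CVE-A"],
    "Receive Time": pd.to_datetime([
        "2025-01-15 10:00:00", "2025-01-15 11:00:00",
        "2025-01-17 09:00:00", "2025-01-16 08:00:00",
    ]),
    "Source Country": ["Germany", "Germany", "Germany", "France"],
    "Severity": ["Critical", "High", "Critical", "Medium"],
})

profiles = build_ip_profiles(sample)
print(profiles)
```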

Data Compatibility: Optimized for immediate deployment in PA-based security infrastructures.

AI-Assisted Development Methodology

Rather than spending weeks or even months developing a proof of concept (POC) from scratch, I chose a development methodology that uses AI as a coding partner to accelerate implementation while I maintained control over architecture decisions.

This methodology allowed me to focus on the security logic and business requirements while AI handled the implementation details, dramatically accelerating development without compromising quality.

The resulting code supports threat analysis, but final security decisions remain entirely in human hands.

Development Process:

- Requirements Engineering: I manually analyzed what the security team actually needed and identified the technical constraints

- Architectural Design: I designed the system structure, deciding how data should flow and what components were needed

- AI-Powered Implementation: I used AI to convert my specifications into actual Python code, reviewing and refining each component

- Iterative Validation: Each piece was tested and verified to ensure it met Orange’s enterprise security standards

🔍 Multi-Algorithm Threat Detection Engine

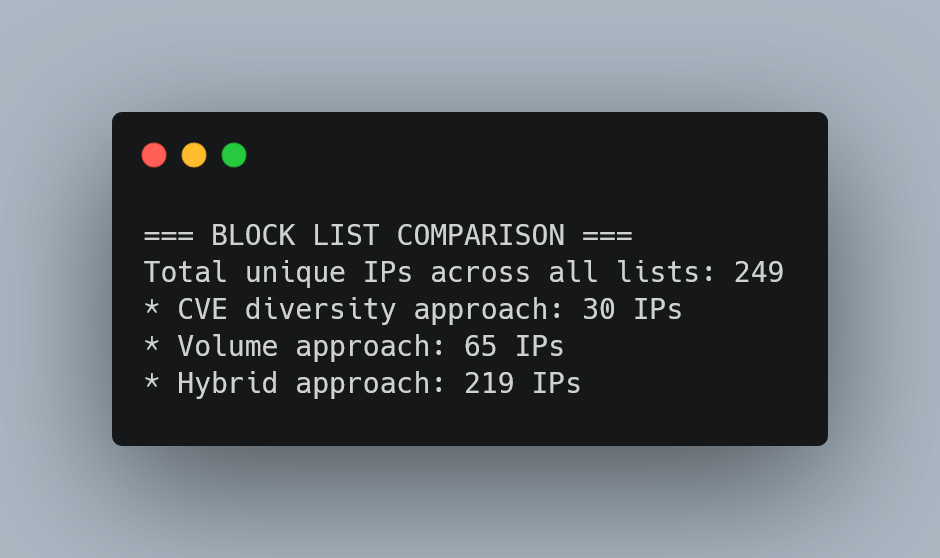

The system implements three distinct analysis approaches that operate independently and generate separate reports and block lists. Each algorithm targets different attack patterns with complementary detection strategies: precision targeting for sophisticated threats, volume-based detection for broad coverage, and hybrid analysis.

Independent Operation: Each approach produces its own block list, intelligence report, and statistical analysis. Security teams can choose to implement one, two, or all three approaches based on their specific requirements and risk tolerance.

Approach 1: CVE Diversity Analysis

Concept: Think of an attacker testing many different types of locks on a building - this behavior indicates systematic reconnaissance rather than random attempts.

Identifies IPs having exploited a large number of unique CVEs over the analyzed period. This approach is particularly effective for detecting sophisticated attackers who use various techniques to explore system vulnerabilities.

Technical Implementation

def analyze_high_cve_ips_total(self, threshold_cve=10, threshold_attempts=50):
    # Keep only vulnerability entries
    vulnerability_data = self.data[self.data['Threat/Content Type'] == 'vulnerability']
    # Exclude flood signatures (e.g. TCP floods) to reduce false positives
    non_flood_vulns = vulnerability_data[
        ~vulnerability_data['Threat/Content Name'].str.contains('flood', case=False, na=False)
    ]
    for ip, group in non_flood_vulns.groupby('Source address'):
        # Count unique CVEs attempted by each IP
        cve_list = group['Threat/Content Name'].tolist()
        unique_cve_list = list(set(cve_list))
        unique_cve_count = len(unique_cve_list)
        # Classification and metadata extraction for each IP
        if unique_cve_count >= threshold_cve:
            # Add to CVE-based block list with complete metadata
            ...  # omitted in this excerpt

Detection Logic

Inclusion criteria: IP with unique_cve_count ≥ threshold_cve

- Explanation: An IP address is added to the block list if it has attempted to exploit at least N different vulnerabilities (CVEs). For example, with the default threshold of 10, an IP testing 15 different security flaws would be included, while an IP testing only 5 different flaws would not.

Configurable threshold: --threshold-cve N (default: 10)

- Explanation: You can adjust the sensitivity. Lower values (e.g., 5) catch more IPs but may include less sophisticated scanners; higher values (e.g., 20) focus only on the most diverse and advanced reconnaissance tools.

Pre-filtering: Excludes TCP flood attacks to reduce false positives

- Explanation: TCP flood attacks generate massive volumes of identical alerts that would skew the CVE diversity count. These are filtered out because they represent different attack types (DoS) rather than vulnerability exploitation.

Metadata extraction: Country, severity distribution, temporal analysis, CVE frequency

- Explanation: For each detected IP, the system collects additional intelligence: where it’s from, how serious the attempted attacks were, when attacks occurred, and which specific vulnerabilities were most targeted. This provides context for security decisions.
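The detection logic above can be condensed into a vectorised form. This is a minimal standalone sketch, not the tool's method: `high_cve_diversity_ips` is a hypothetical name, and it uses `groupby(...).nunique()` in place of the explicit loop shown earlier. The sample data is invented.

```python
import pandas as pd


def high_cve_diversity_ips(vulns, threshold_cve=10):
    """Return {ip: unique signature count} for IPs at or above the threshold."""
    names = vulns["Threat/Content Name"]
    # Drop flood signatures first, mirroring the pre-filtering step.
    non_flood = vulns[~names.str.contains("flood", case=False, na=False)]
    unique_counts = non_flood.groupby("Source address")["Threat/Content Name"].nunique()
    flagged = unique_counts[unique_counts >= threshold_cve]
    return flagged.sort_values(ascending=False).to_dict()


sample = pd.DataFrame({
    "Source address": ["203.0.113.5"] * 4 + ["198.51.100.9"] * 3,
    "Threat/Content Name": [
        "CVE-2023-0001", "CVE-2023-0002", "CVE-2023-0003", "TCP Flood",
        "CVE-2023-0001", "CVE-2023-0001", "CVE-2023-0001",
    ],
})

# A low threshold keeps the toy example small.
flagged = high_cve_diversity_ips(sample, threshold_cve=3)
print(flagged)
```

In this sample the second address repeats a single signature three times, so it stays below the diversity threshold even though its alert count matches the first address's non-flood alerts.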

Use Cases

- Detection of vulnerability scanners and automated exploitation tools

- Identification of methodical reconnaissance activities

- Risk indicator: An IP targeting numerous different vulnerabilities indicates methodical reconnaissance

Approach 2: Volume Analysis

Concept: Some attackers rely on brute force - making so many attempts that they become impossible to ignore, regardless of sophistication.

Identifies IPs having performed a large number of attack attempts, regardless of CVE diversity. This approach detects brute force attacks, automated scanners, and attacks concentrating numerous attempts on a limited number of vulnerabilities.

Technical Implementation

# Count total exploitation attempts per IP
for ip, group in non_flood_vulns.groupby('Source address'):
    cve_list = group['Threat/Content Name'].tolist()
    # Include all alerts, even repeated ones on the same CVE
    total_attempts = len(cve_list)
    if total_attempts >= threshold_attempts:
        # Add to volume-based block list
        # Aggregate attempts by IP and extract associated statistics
        ...  # omitted in this excerpt

Detection Logic

Inclusion criteria: IP with total_attempts ≥ threshold_attempts

- Explanation: An IP address is added to the block list if it has made at least N total exploitation attempts, regardless of how many different vulnerabilities were targeted. For example, with the default threshold of 50, an IP making 100 attempts (even if all target the same vulnerability) would be included.

Configurable threshold: --threshold-attempts N (default: 50)

- Explanation: Lower values (e.g., 20) catch more aggressive scanners but may include legitimate security testing; higher values (e.g., 100) focus only on the highest-volume attackers.

Scope: Counts all exploitation attempts, including repetitive targeting of same CVE

- Explanation: Unlike the CVE approach which counts unique vulnerabilities, this counts every single attack attempt. An IP repeatedly hammering the same vulnerability 50 times would be detected here but not in the CVE approach.

Aggregation: Attempts aggregated by IP with statistical extraction

- Explanation: All attack attempts from the same source IP are counted together across the entire analysis period, providing volume-based threat intelligence.
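For comparison with the CVE diversity approach, the volume criterion can be sketched the same way. `high_volume_ips` is a hypothetical helper written for this example; it counts every non-flood alert per source address with `groupby(...).size()`.

```python
import pandas as pd


def high_volume_ips(vulns, threshold_attempts=50):
    """Return {ip: total attempts} for IPs at or above the threshold."""
    names = vulns["Threat/Content Name"]
    non_flood = vulns[~names.str.contains("flood", case=False, na=False)]
    # Every alert counts, including repeats against the same signature.
    attempts = non_flood.groupby("Source address").size()
    flagged = attempts[attempts >= threshold_attempts]
    return flagged.sort_values(ascending=False).to_dict()


# Invented data: one address hammering a single signature, one quiet address.
sample = pd.DataFrame({
    "Source address": ["203.0.113.5"] * 5 + ["198.51.100.9"] * 2,
    "Threat/Content Name": ["CVE-2023-0001"] * 5 + ["CVE-2023-0002", "CVE-2023-0003"],
})

flagged = high_volume_ips(sample, threshold_attempts=5)
print(flagged)
```

In this sample the flagged address targets only one signature, so it would be invisible to a diversity threshold above 1 but is caught here.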

Use Cases

- Detection of brute force attacks and repeated exploitation attempts

- Risk indicator: An IP generating high volume of alerts reveals abnormal and potentially malicious activity

Approach 3: Hybrid Intelligence Analysis

Concept: Not all suspicious activity should be treated equally - context matters. A critical attack from an unknown international source warrants immediate action, while similar activity from a local source needs more evidence.

Intelligently combines multiple criteria (severity, volume, temporal persistence) for advanced and contextual threat analysis. This approach detects attacks that might go unnoticed in traditional approaches while limiting false positives through combinatorial logic.

Dual Operation Modes:

- Standard mode: Applies uniform criteria to all IPs regardless of origin

- Geographic differentiation mode (--strict-local flag): Applies stricter criteria to local IPs (France/Réunion/Mayotte) while using standard sensitivity for international sources

Technical Implementation

def analyze_hybrid_threats(self, critical_threshold=1, high_threshold=5,
                           volume_threshold=100, days_active_threshold=2,
                           strict_local=False):
    # Filter low-risk alerts to reduce false positives
    low_risk_alerts = [
        "HTTP Unauthorized Error",
        "TLS Encrypted Client Hello Extension Detection",
        "HTTP WWW-Authentication Failed",
        "Non-TLS Traffic On Port 443",
        "HTTP2 Protocol Suspicious RST STREAM Frame detection"
    ]
    for ip, data in self.all_ips_details.items():
        # Check if it's a local IP (France/Réunion/Mayotte)
        country = str(data['country']).lower()
        is_local = (data['country'] == 'RUnion' or
                    any(name in country
                        for name in ('france', 'reunion', 'réunion', 'mayotte')))

Decision Logic

How the detection works: The system evaluates each IP against these 4 conditions sequentially. As soon as one condition is satisfied, the IP is added to the block list. The conditions use AND logic internally (multiple requirements within each rule) but OR logic between rules (only one rule needs to match).

High attacks with activity: High ≥ threshold AND (attempts ≥ 20 OR days ≥ 2)

- Combined rule: High-severity attacks PLUS supporting evidence (either volume OR persistence). An IP needs both the severity level AND at least one activity indicator.

- Why this combination: High-severity exploits alone could be false positives from security scanners, but when combined with significant activity (volume or persistence), it indicates genuine malicious intent rather than accidental discovery.

Volume with persistence: attempts ≥ threshold AND days ≥ 2

- Combined rule: High volume PLUS temporal persistence. Both conditions must be met: high activity that spans multiple days.

- Why this combination: High volume on a single day could be automated scanning or legitimate testing, but sustained high-volume activity across multiple days is a strong indicator of determined, systematic attacks rather than one-off events.

Persistent low-volume: days ≥ 4 AND attempts ≥ 10 AND (any non-informational severity)

- Complex rule: Long persistence PLUS minimal activity PLUS at least one meaningful alert. All three conditions are required to catch subtle, long-term threats.

- Why this combination: Advanced attackers often use low-noise, long-duration reconnaissance to avoid detection. The combination of extended persistence with minimal but consistent activity and at least one security alert indicates sophisticated, stealthy campaigns rather than random probing.

Any Critical attack: Critical ≥ threshold

- Simple rule: Immediate concern for critical attacks regardless of other factors.

- Why this works alone: Critical-severity vulnerabilities can lead to complete system compromise. Even a single successful critical exploit represents an unacceptable risk, making additional criteria unnecessary for this threat level.
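The four rules can be read as a short chain of checks that stops at the first match. The sketch below follows the order in which they are listed above; `IpProfile`, `hybrid_trigger`, and the returned labels are hypothetical names for this example. Tying the "days ≥ 2" condition to `days_active_threshold` is an assumption based on its documented default of 2, while the constants 20, 4, and 10 are taken literally from the rule text.

```python
from dataclasses import dataclass


@dataclass
class IpProfile:
    critical: int                # number of Critical-severity alerts
    high: int                    # number of High-severity alerts
    attempts: int                # total alerts for this IP
    days_active: int             # days on which the IP was seen
    has_non_informational: bool  # at least one alert above Informational


def hybrid_trigger(p, critical_threshold=1, high_threshold=5,
                   volume_threshold=100, days_active_threshold=2):
    """Return the name of the first matching rule, or None if no rule matches."""
    # Rule 1: High-severity alerts backed by volume OR persistence.
    if p.high >= high_threshold and (p.attempts >= 20 or p.days_active >= days_active_threshold):
        return "high_with_activity"
    # Rule 2: high volume sustained over several days.
    if p.attempts >= volume_threshold and p.days_active >= days_active_threshold:
        return "volume_with_persistence"
    # Rule 3: long, quiet campaigns with at least one meaningful alert.
    if p.days_active >= 4 and p.attempts >= 10 and p.has_non_informational:
        return "persistent_low_volume"
    # Rule 4: any Critical alert is enough on its own.
    if p.critical >= critical_threshold:
        return "critical"
    return None


quiet_campaign = IpProfile(critical=0, high=0, attempts=12, days_active=5,
                           has_non_informational=True)
print(hybrid_trigger(quiet_campaign))
```

Returning the rule name rather than a bare boolean makes it easy to record which criterion triggered the listing, which is what the detailed hybrid block list is described as containing.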

Geographic Adaptation: When --strict-local is enabled, local IPs (France/Réunion/Mayotte) use stricter thresholds with the same logical structure, meaning more evidence is required before a local address is listed, while international IPs use standard sensitivity. When disabled, all IPs use the standard criteria regardless of origin.
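One way to picture the geographic adaptation is as a choice between two threshold sets. In the sketch below the standard values are the documented defaults, but the strict values are purely hypothetical placeholders, since the article does not state them; `is_local` and `thresholds_for` are also illustrative names.

```python
LOCAL_KEYWORDS = ("france", "reunion", "réunion", "mayotte")

# Documented defaults for the hybrid approach.
STANDARD = {"critical": 1, "high": 5, "volume": 100, "days_active": 2}
# Hypothetical stricter values for local sources (not taken from the tool).
STRICT_LOCAL = {"critical": 3, "high": 15, "volume": 300, "days_active": 4}


def is_local(country):
    """Case-insensitive substring match on the country label."""
    name = str(country).lower()
    return any(keyword in name for keyword in LOCAL_KEYWORDS)


def thresholds_for(country, strict_local=False):
    """Pick the threshold set for one IP based on its country of origin."""
    if strict_local and is_local(country):
        return STRICT_LOCAL
    return STANDARD


print(thresholds_for("France", strict_local=True))
print(thresholds_for("Germany", strict_local=True))
```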

Use Cases

- Detection of sophisticated and persistent low-noise attacks

- Risk indicator: Combination of risk factors (severity, persistence, diversity) revealing malicious behavior

- Configurable thresholds: All criteria adjustable for environment-specific tuning

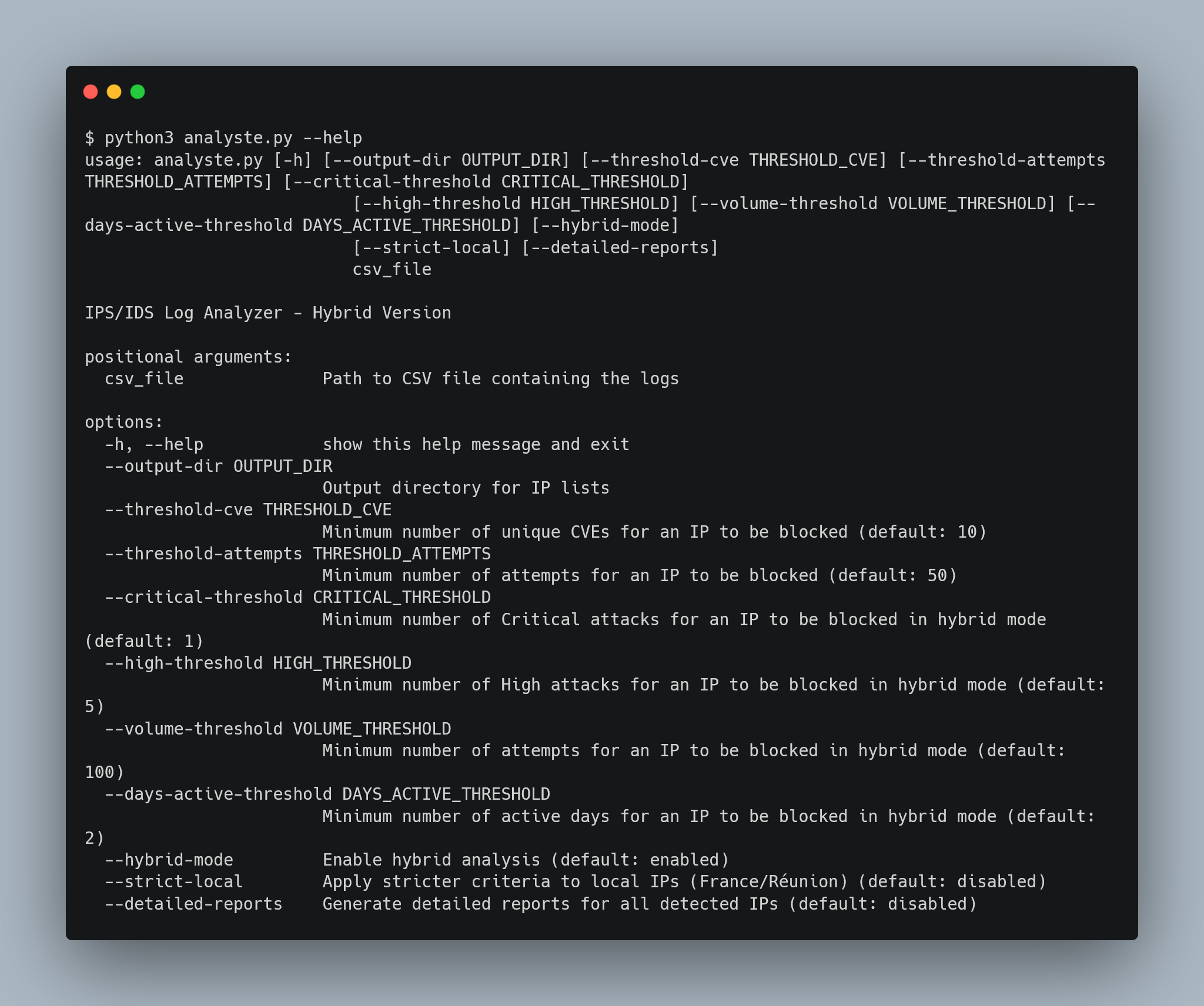

Configuration & Parameters

Command-Line Interface

The tool provides a comprehensive help system:

Essential Thresholds

- --threshold-cve N: How many different vulnerabilities an IP must target for the CVE approach (default: 10)

- --threshold-attempts N: How many total attack attempts trigger inclusion for the Volume approach (default: 50)

- --critical-threshold N: How many critical-severity attacks trigger hybrid approach inclusion (default: 1)

- --strict-local: Optional flag to apply different standards for local vs international IPs in the hybrid approach

Hybrid Approach Advanced Tuning

- --high-threshold N: Minimum high-severity attacks for hybrid inclusion (default: 5)

- --volume-threshold N: Volume threshold for hybrid analysis (default: 100)

- --days-active-threshold N: Minimum persistence days for hybrid analysis (default: 2)

Output Control

- --output-dir DIR: Where to save generated reports and block lists

- --detailed-reports: Generate comprehensive reports for all detected IPs (not just top 10)
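The options listed above map naturally onto `argparse`. The following is a sketch of how such a parser could be declared, using the documented flag names and defaults; the help strings, the `build_parser` name, and the default for `--output-dir` are assumptions, and how the tool receives its input CSV is not described here, so that argument is left out.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(description="IPS/IDS threat log analysis (illustrative)")
    # Essential thresholds
    parser.add_argument("--threshold-cve", type=int, default=10, metavar="N",
                        help="unique CVEs required for the CVE approach")
    parser.add_argument("--threshold-attempts", type=int, default=50, metavar="N",
                        help="total attempts required for the volume approach")
    parser.add_argument("--critical-threshold", type=int, default=1, metavar="N",
                        help="critical alerts required for the hybrid approach")
    parser.add_argument("--strict-local", action="store_true",
                        help="apply stricter criteria to local IPs")
    # Hybrid approach advanced tuning
    parser.add_argument("--high-threshold", type=int, default=5, metavar="N")
    parser.add_argument("--volume-threshold", type=int, default=100, metavar="N")
    parser.add_argument("--days-active-threshold", type=int, default=2, metavar="N")
    # Output control ("output" as the default directory is an assumption)
    parser.add_argument("--output-dir", default="output", metavar="DIR")
    parser.add_argument("--detailed-reports", action="store_true")
    return parser


args = build_parser().parse_args(["--threshold-cve", "15", "--strict-local"])
print(args.threshold_cve, args.threshold_attempts, args.strict_local)
```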

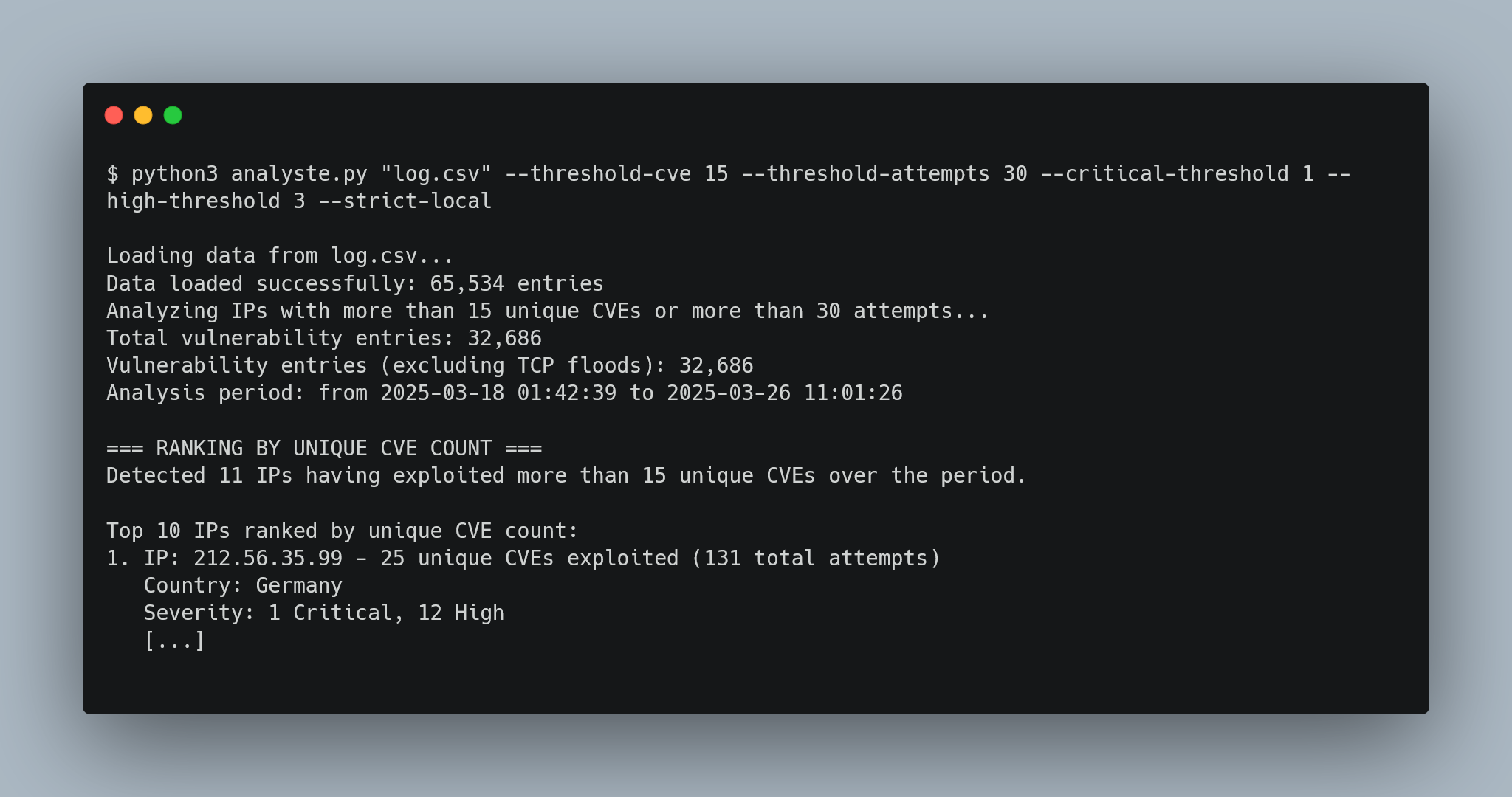

Real-World Execution Example

Here’s the tool running with customized parameters:

This produces partial output for the three approaches, excerpted from the complete report available in the output directory:

Independent Report Generation

Each approach generates separate outputs:

CVE Approach Outputs

- block_list_by_cve_[timestamp].txt: Detailed list with CVE counts

- block_list_by_cve_simple_[timestamp].txt: Plain IP list for firewall import

- block_list_by_cve_detailed_[timestamp].csv: Complete metadata export

Volume Approach Outputs

- block_list_by_attempts_[timestamp].txt: Detailed list with attempt counts

- block_list_by_attempts_simple_[timestamp].txt: Plain IP list

- block_list_by_attempts_detailed_[timestamp].csv: Complete metadata export

Hybrid Approach Outputs

- block_list_hybrid_[timestamp].txt: Detailed list with trigger criteria

- block_list_hybrid_simple_[timestamp].txt: Plain IP list

- block_list_hybrid_detailed_[timestamp].csv: Complete metadata export

Security teams can implement one, two, or all three approaches based on their specific operational requirements and risk tolerance. The final decision to implement blocking rules remains with the security operations team.
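To make the file layout concrete, here is a small sketch that writes the "simple" and "detailed" variants for one approach using only the standard library. The file name pattern follows the lists above, but the timestamp format, the CSV column names, and the `write_block_lists` helper are assumptions for illustration.

```python
import csv
import tempfile
from datetime import datetime
from pathlib import Path


def write_block_lists(flagged, output_dir, prefix="block_list_by_cve", now=None):
    """Write a plain IP list and a detailed CSV; return both paths.

    flagged maps each IP to a dict with "country" and "count" keys.
    """
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")  # assumed format
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    simple_path = out / f"{prefix}_simple_{stamp}.txt"
    detailed_path = out / f"{prefix}_detailed_{stamp}.csv"
    # One IP per line, in insertion order.
    simple_path.write_text("\n".join(flagged) + "\n", encoding="utf-8")
    with detailed_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["ip", "country", "count"])  # assumed column names
        for ip, meta in flagged.items():
            writer.writerow([ip, meta["country"], meta["count"]])
    return simple_path, detailed_path


demo = {
    "203.0.113.5": {"country": "Germany", "count": 25},
    "198.51.100.9": {"country": "France", "count": 12},
}
with tempfile.TemporaryDirectory() as tmp:
    simple, detailed = write_block_lists(demo, tmp, now=datetime(2025, 1, 15, 14, 30, 25))
    simple_name = simple.name
    simple_lines = simple.read_text(encoding="utf-8").splitlines()
    detailed_rows = detailed.read_text(encoding="utf-8").splitlines()

print(simple_name)
print(simple_lines)
```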

Operational Insights & Results

Cross-Algorithm Analysis

The system analyzes intersections between approaches, providing valuable insights for security operations:

- IPs present in all three lists represent the most serious threats - These require immediate attention as they exhibit multiple threat indicators

- IPs only in hybrid approach are often more subtle attacks - Advanced threats that evade simple volume or diversity metrics

- IPs with numerous Critical alerts should be prioritized for blocking consideration - Highest risk regardless of other factors
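The overlap between the three lists is a straightforward set computation. A minimal sketch, with `compare_block_lists` and the result keys chosen for this example and invented addresses as input:

```python
def compare_block_lists(cve_ips, volume_ips, hybrid_ips):
    """Group IPs by which block lists they appear in."""
    cve, volume, hybrid = set(cve_ips), set(volume_ips), set(hybrid_ips)
    return {
        # Flagged by every approach: strongest combined signal.
        "all_three": cve & volume & hybrid,
        # Flagged only by the hybrid rules: typically the quieter campaigns.
        "hybrid_only": hybrid - cve - volume,
        # Union of all lists, useful for overall counts.
        "any": cve | volume | hybrid,
    }


overlap = compare_block_lists(
    cve_ips=["203.0.113.5", "203.0.113.7"],
    volume_ips=["203.0.113.5", "198.51.100.9"],
    hybrid_ips=["203.0.113.5", "192.0.2.44"],
)
print(sorted(overlap["all_three"]))
print(sorted(overlap["hybrid_only"]))
```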

Practical Experience Findings

From operational deployment, the CVE diversity approach proves most effective for detecting the most sophisticated and dangerous attackers, making it the priority method for addressing immediately critical threats. This approach consistently identifies advanced reconnaissance tools and professional penetration testing frameworks.

Geographic Intelligence Benefits

The differentiation between local and international sources addresses the core security challenge: blocking dangerous traffic while allowing legitimate business activity. Stricter inclusion criteria for local IPs (France/Réunion/Mayotte) require more evidence before a local address is listed, which prevents false positives from legitimate business operations, while standard sensitivity for international sources maintains security against unknown threats.

📊 Intelligence Outputs & Real-World Analysis

The tool transforms raw Palo Alto logs into actionable cyber threat intelligence, providing security teams with comprehensive analysis capabilities. Here’s what the system produces in real operational environments.

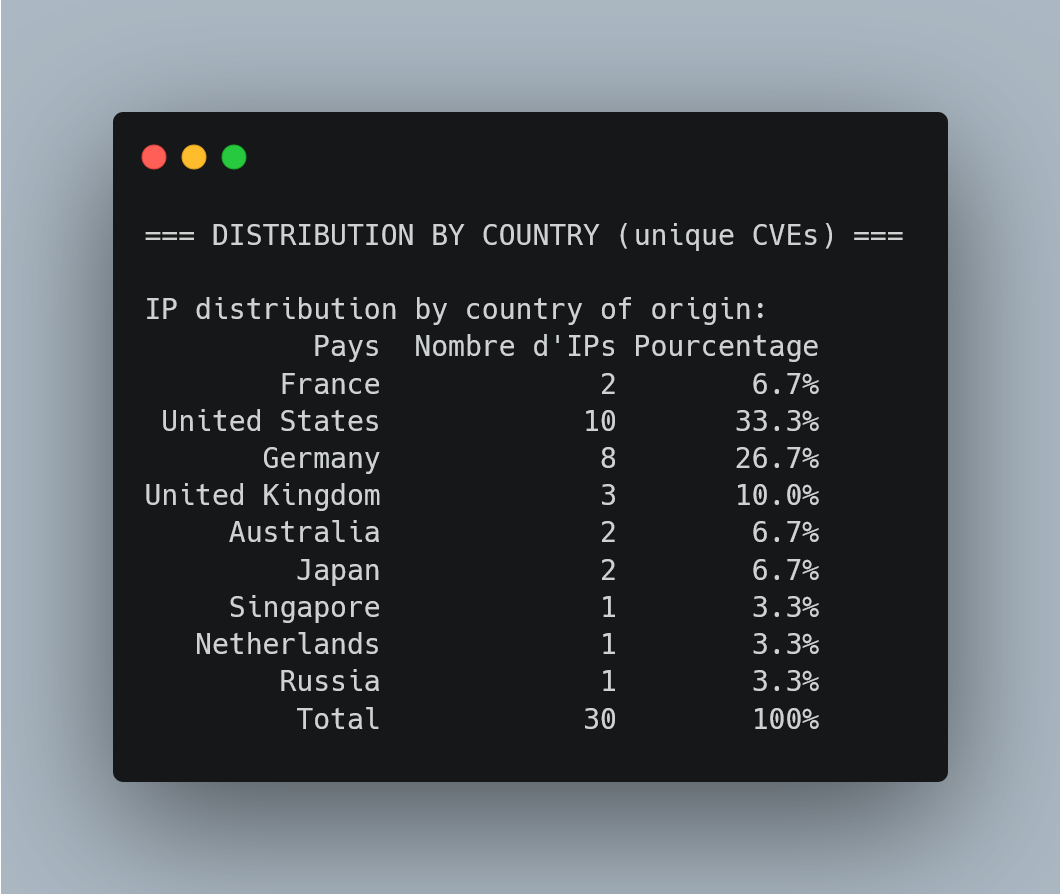

Geographic Threat Intelligence

The system provides immediate geographic context for strategic threat assessment:

Strategic Intelligence Value: 43.3% of sophisticated attacks (CVE diversity approach) originate from US infrastructure, with German sources representing 36.7%. This geographic distribution suggests potential use of compromised hosting services rather than direct nation-state activity, informing response strategies for Orange Réunion’s security team.
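Percentages like these come from counting flagged IPs per country. A minimal sketch with pandas follows; the helper name `country_distribution` and the three sample IPs are invented for illustration and do not reproduce the figures quoted above.

```python
import pandas as pd


def country_distribution(ip_countries):
    """Given {ip: country}, return IP counts and percentage share per country."""
    counts = pd.Series(ip_countries).value_counts()
    percent = (counts / counts.sum() * 100).round(1)
    return pd.DataFrame({"ips": counts, "percent": percent})


table = country_distribution({
    "203.0.113.5": "Brazil",
    "203.0.113.7": "Brazil",
    "198.51.100.9": "Spain",
})
print(table)
```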

Automated Risk Classification & Severity Assessment

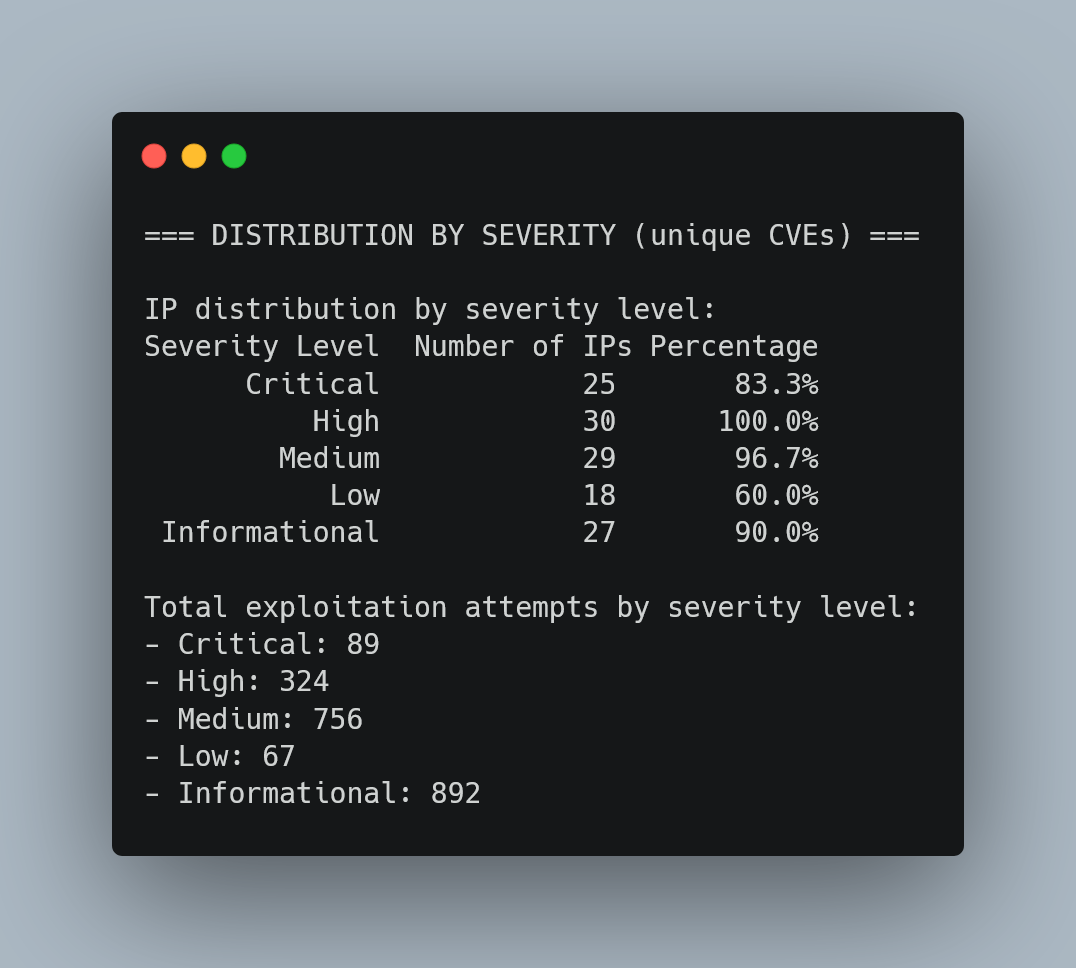

Risk Assessment Intelligence: 93.3% of detected sophisticated attackers attempt Critical-level exploits, with 100% targeting High-severity vulnerabilities. This data enables immediate risk prioritization for security operations.
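Because one IP can trigger alerts at several severity levels, these percentages are shares of IPs with at least one alert at a given level, so they do not sum to 100%. The sketch below shows one way to compute such a figure; `severity_reach` is a hypothetical name and the sample alerts are invented.

```python
import pandas as pd


def severity_reach(vulns):
    """Percentage of source IPs with at least one alert at each severity level."""
    per_ip = pd.crosstab(vulns["Source address"], vulns["Severity"])
    # (per_ip > 0) marks whether an IP reached a level; the column mean is the share.
    return ((per_ip > 0).mean() * 100).round(1)


sample = pd.DataFrame({
    "Source address": ["203.0.113.5", "203.0.113.5", "198.51.100.9"],
    "Severity": ["Critical", "High", "High"],
})

reach = severity_reach(sample)
print(reach)
```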

Multi-Algorithm Detection Effectiveness

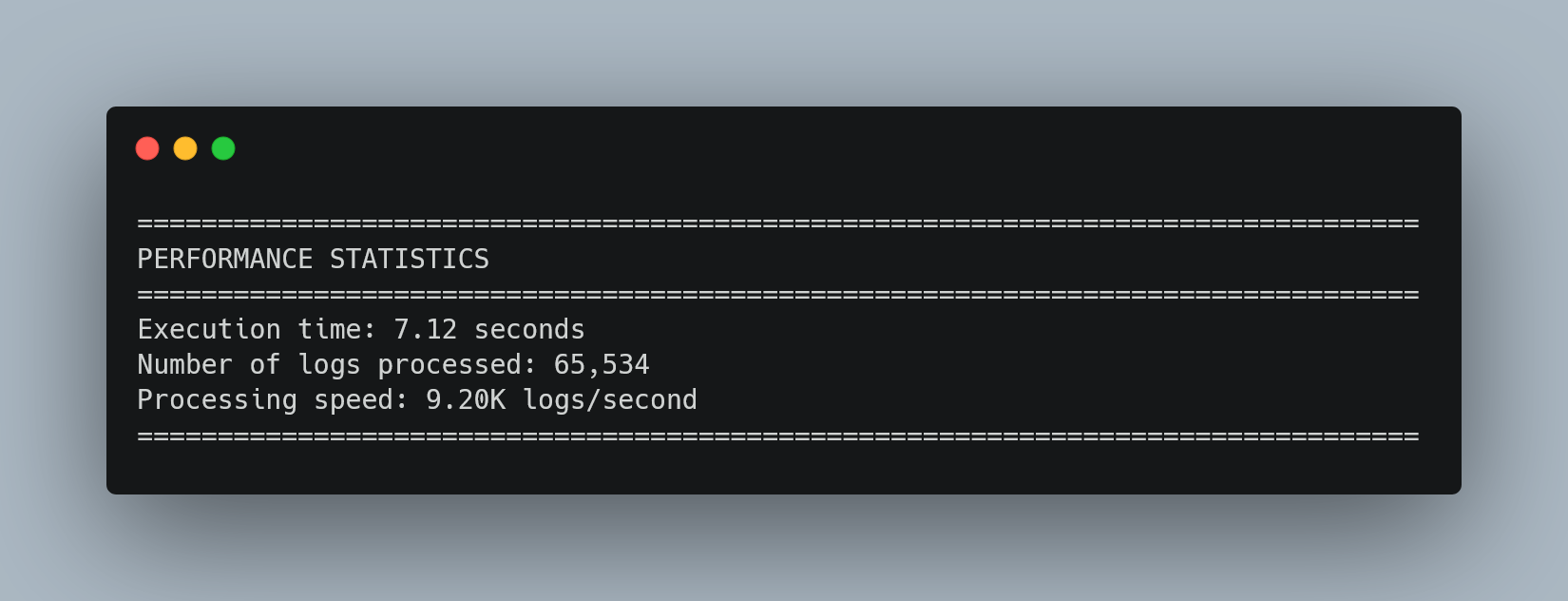

Performance Metrics

Scalability Validation: Processing 65,534 security events in 7.12 seconds (9,200 logs/second) demonstrates production-ready performance for threat analysis. This represents a massive time reduction from manual analysis while providing more comprehensive coverage.

🕵️ Forensic Evidence Packages

The system generates light forensic profiles for each detected threat, enabling immediate security decisions with evidentiary support. Here are real examples:

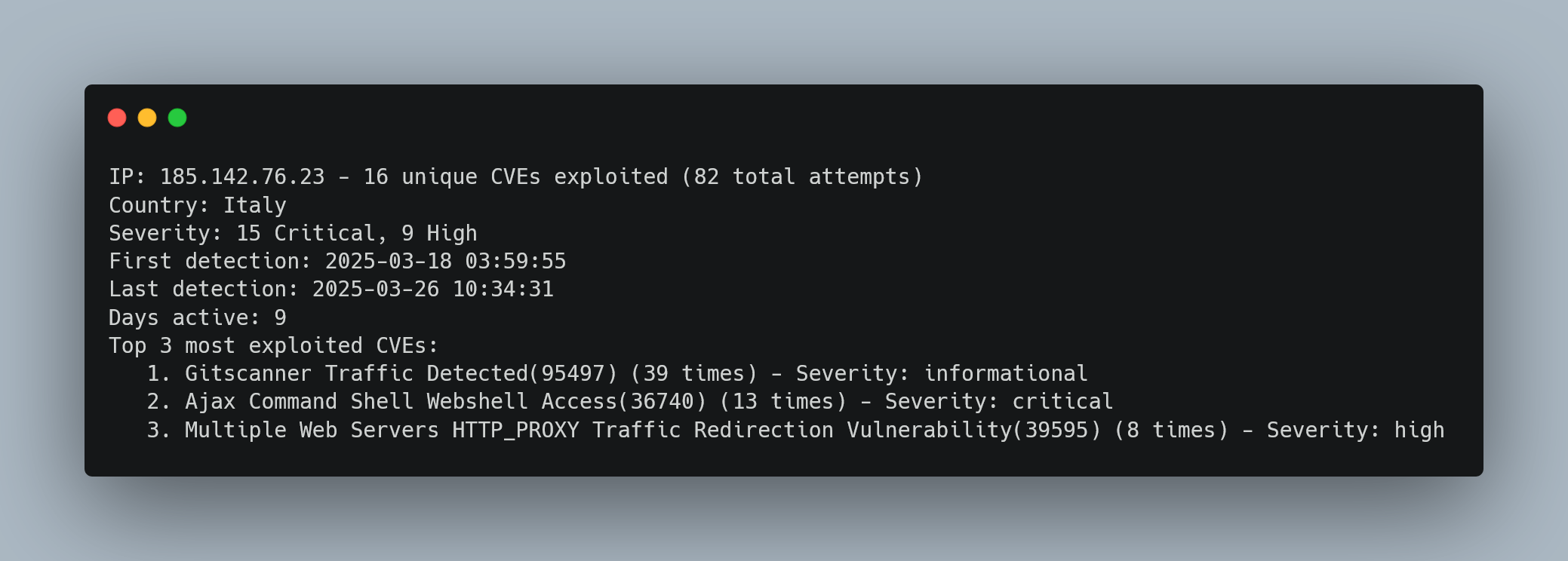

Advanced Persistent Reconnaissance Campaign

Human Forensic Analysis:

- Attack Pattern: Sophisticated multi-phase campaign spanning 9 days

- Phase 1 - Reconnaissance: Systematic infrastructure mapping (Gitscanner, 39 attempts)

- Phase 2 - Exploitation: Active webshell deployment attempts (Ajax Command Shell, 13 critical attempts)

- Phase 3 - Persistence: HTTP proxy redirection for lateral movement (8 high-severity attempts)

- Threat Level: Immediate blocking recommended - 15 Critical + 9 High severity attempts indicate active compromise attempts

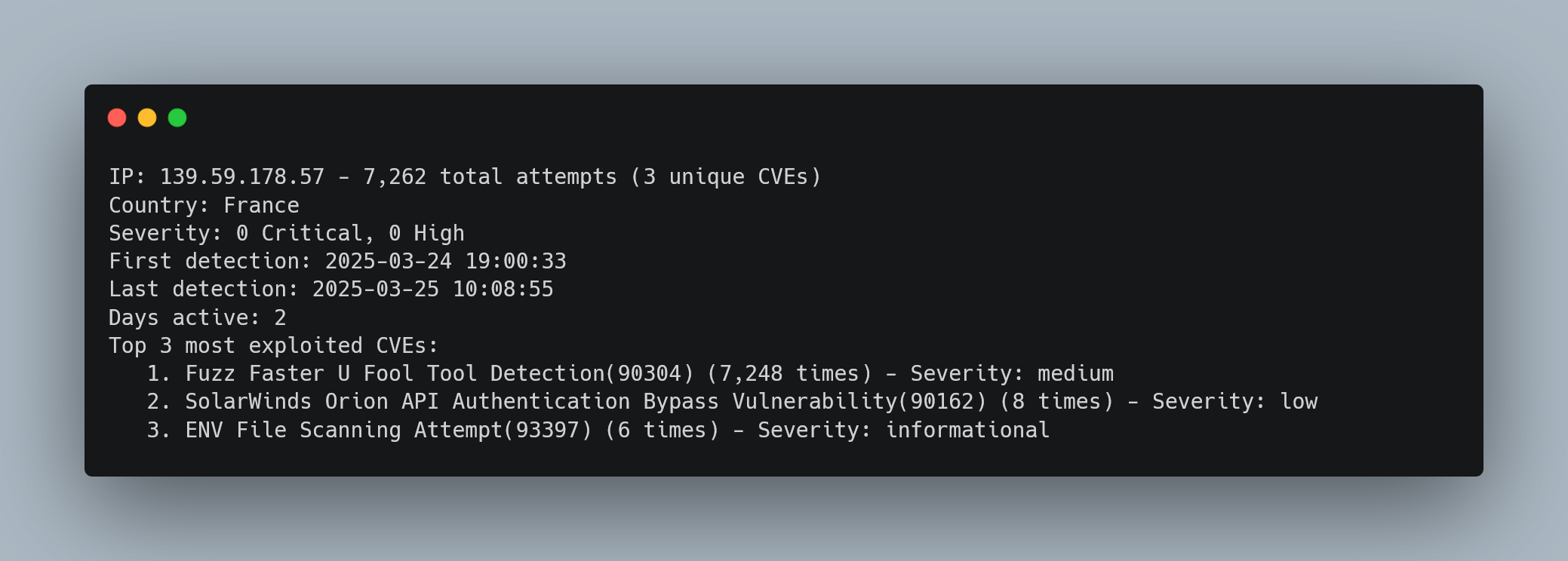

High-Volume Automated Attack Campaign

Human Forensic Analysis:

- Attack Pattern: Automated fuzzing campaign with 7,262 attempts in 48 hours

- Tool Signature: “Fuzz Faster U Fool” (ffuf) is an open-source web fuzzer commonly used in professional penetration testing

- Geographic Context: Local French infrastructure requiring careful evaluation (potential legitimate security testing)

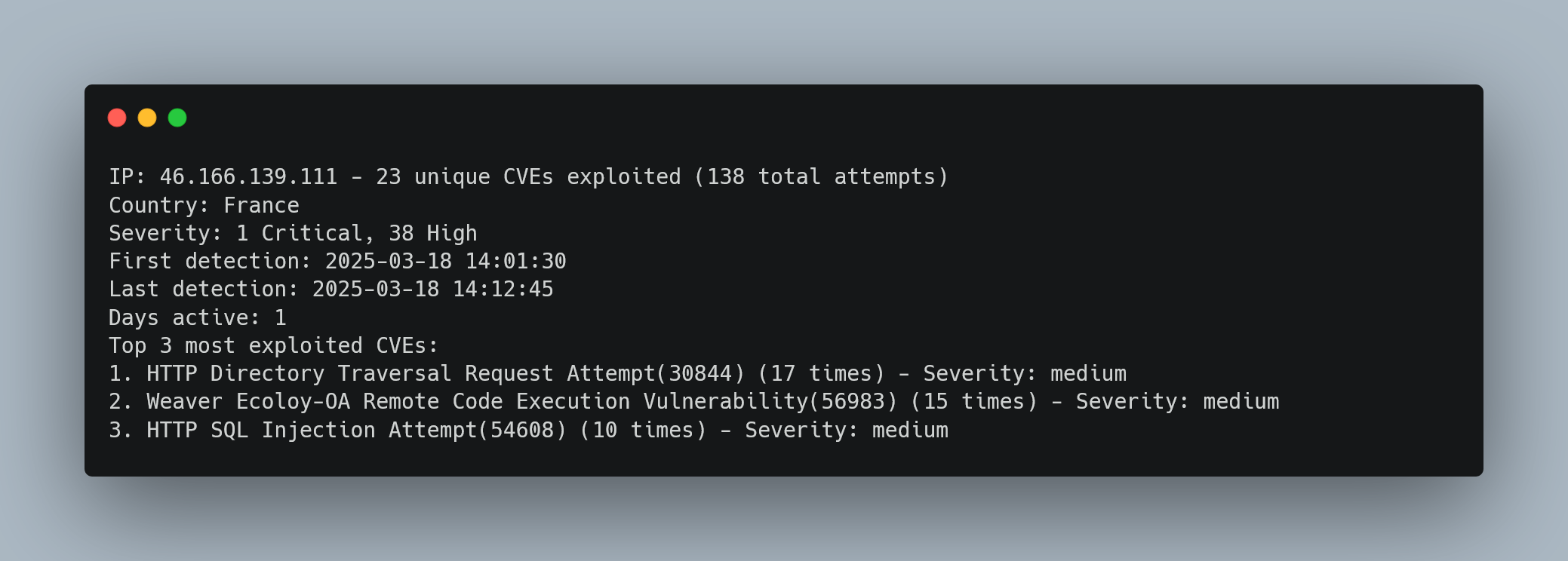

Local IP with Enhanced Scrutiny

Human Forensic Analysis:

- Geographic Intelligence: French source requiring --strict-local evaluation

- Concentrated Attack: 138 attempts in 11 minutes indicates automated exploitation tool

- Vulnerability Focus: Directory traversal + RCE + SQL injection suggests web application attack framework

- Business Context: Local source may indicate compromised French infrastructure or legitimate security testing requiring verification

🎯 Operational Intelligence Value

Immediate Decision Support

Each forensic package provides security teams with:

- Complete attack timelines for incident documentation

- Tool attribution through signature recognition

- Risk classification with severity-based prioritization

- Geographic context for threat source assessment

- Evidence packages for compliance and audit requirements

📊 Output & Intelligence Products

The system transforms raw security data into actionable outputs, much like how a security analysis team would provide different reports for different audiences and purposes.

Automated Block Lists (For Technical Teams)

Actionable IP lists that firewalls and other network equipment can immediately understand and act upon.

- TXT Format: Text lists that can be directly imported into firewall rules via API or as an External Dynamic List (EDL) on Palo Alto firewalls

- Detailed CSV: Complete case files with evidence and metadata for audit trails

- Categorized Lists: Separate lists per detection method, allowing security teams to apply different blocking strategies based on threat type

Executive Intelligence Reports (For Management)

These reports translate technical security data into business intelligence that executives and management can use for strategic decision-making.

- Geographic Threat Landscape: Where are attacks coming from? Are we seeing coordinated campaigns from specific regions?

- Severity Impact Assessment: What types of attacks are we facing? How serious are the threats to our business operations?

- Temporal Attack Analysis: When do attacks happen? Are we seeing sustained campaigns or opportunistic probes?

- Comparative Effectiveness: Which detection methods are most valuable? How do our different defenses complement each other?

Here’s a sample of the threat intelligence report generated for the CVE approach:

================================================================================

DETAILED REPORT - CVE DIVERSITY APPROACH

Generated on 2025-07-12 13:55:41

================================================================================

EXECUTIVE SUMMARY

Detected 11 IPs having exploited multiple unique CVEs.

Analysis demonstrates sophisticated reconnaissance and exploitation attempts.

=== GEOGRAPHIC DISTRIBUTION (CVE diversity) ===

IP distribution by country of origin:

Country          Number of IPs    Percentage
Brazil           2                18.2%
India            2                18.2%
Netherlands      2                18.2%
South Korea      2                18.2%
Spain            1                9.1%
Canada           1                9.1%
Mexico           1                9.1%
Total            11               100.0%

=== SEVERITY DISTRIBUTION (CVE diversity) ===

IP distribution by severity level:

Severity Level    Number of IPs    Percentage
Critical          7                63.6%
High              10               90.9%
Medium            11               100.0%
Low               5                45.5%
Informational     9                81.8%

Total exploitation attempts by severity level:

- Critical: 36

- High: 122

- Medium: 588

- Low: 33

- Informational: 276

================================================================================

INDIVIDUAL IP THREAT PROFILES

================================================================================

1. IP: 194.67.226.170 – 25 unique CVEs exploited (131 total attempts)

Country: Italy

Severity: 1 Critical, 12 High

First detection: 2025-06-17 09:30:58

Last detection: 2025-06-25 11:26:09

Days active: 9

Top exploited CVEs:

1. Gitscanner Traffic Detected(95497) (39 times) - Severity: informational

2. HTTP SQL Injection Attempt(54608) (11 times) - Severity: medium

3. HTTP SQL Injection Attempt(91528) (10 times) - Severity: medium

4. HTTP SQL Injection Attempt(38195) (9 times) - Severity: medium

5. HTTP SQL Injection Attempt(35823) (7 times) - Severity: medium

6. HTTP SQL Injection Attempt(94307) (6 times) - Severity: medium

7. HTTP SQL Injection Attempt(36242) (6 times) - Severity: medium

8. HTTP SQL Injection Attempt(36241) (5 times) - Severity: medium

9. HTTP SQL Injection Attempt(58005) (5 times) - Severity: low

10. HTTP /etc/passwd Access Attempt(35107) (4 times) - Severity: high

Complete list of 25 unique CVEs:

- Nmap Aggressive Option Print Detection(94318)

- ENV File Scanning Attempt(93397)

- HTTP SQL Injection Attempt(58005)

- HTTP SQL Injection Attempt(30514)

- Novell GroupWise Messenger Accept Language Header Overflow Vulnerability(30147)

- HTTP SQL Injection Attempt(91528)

- HTTP Directory Traversal Vulnerability(54701)

- HTTP SQL Injection Attempt(36241)

- HTTP SQL Injection Attempt(33338)

- HTTP Cross Site Scripting Attempt(32658)

- HTTP OPTIONS Method(30520)

- HTTP SQL Injection Attempt(38195)

- HTTP SQL Injection Attempt(59128)

- HTTP SQL Injection Attempt(91523)

- HTTP SQL Injection Attempt(36242)

- Suspicious or malformed HTTP Referer field(35554)

- HTTP /etc/passwd Access Attempt(35107)

- HTTP SQL Injection Attempt(54608)

- Generic HTTP Cross Site Scripting Attempt(31477)

- HTTP SQL Injection Attempt(35823)

- Multiple Web Servers HTTP_PROXY Traffic Redirection Vulnerability(39595)

- HTTP SQL Injection Attempt(57735)

- Apache Shiro Improper Authentication Vulnerability(58132)

- HTTP SQL Injection Attempt(94307)

- Gitscanner Traffic Detected(95497)

--------------------------------------------------------------------------------

2. IP: 82.93.156.224 – 23 unique CVEs exploited (135 total attempts)

Country: Mexico

Severity: 1 Critical, 17 High

First detection: 2025-06-14 21:51:04

Last detection: 2025-06-22 18:44:17

Days active: 8

Top exploited CVEs:

1. Gitscanner Traffic Detected(95497) (24 times) - Severity: informational

2. HTTP SQL Injection Attempt(54608) (12 times) - Severity: medium

3. HTTP SQL Injection Attempt(38195) (11 times) - Severity: medium

4. HTTP SQL Injection Attempt(91528) (11 times) - Severity: medium

5. HTTP SQL Injection Attempt(94307) (10 times) - Severity: medium

6. HTTP SQL Injection Attempt(36242) (9 times) - Severity: medium

7. HTTP SQL Injection Attempt(35823) (8 times) - Severity: medium

8. Multiple Web Servers HTTP_PROXY Traffic Redirection Vulnerability(39595) (7 times) - Severity: high

9. HTTP SQL Injection Attempt(36241) (6 times) - Severity: medium

10. HTTP /etc/passwd Access Attempt(35107) (5 times) - Severity: high

Complete list of 23 unique CVEs:

- ENV File Scanning Attempt(93397)

- HTTP SQL Injection Attempt(58005)

- HTTP SQL Injection Attempt(30514)

- Novell GroupWise Messenger Accept Language Header Overflow Vulnerability(30147)

- HTTP SQL Injection Attempt(91528)

- Ajax Command Shell Webshell Access(36740)

- HTTP SQL Injection Attempt(36241)

- HTTP SQL Injection Attempt(33338)

- HTTP Cross Site Scripting Attempt(32658)

- HTTP SQL Injection Attempt(38195)

- HTTP SQL Injection Attempt(59128)

- HTTP SQL Injection Attempt(91523)

- HTTP SQL Injection Attempt(36242)

- Suspicious or malformed HTTP Referer field(35554)

- HTTP /etc/passwd Access Attempt(35107)

- HTTP SQL Injection Attempt(54608)

- Generic HTTP Cross Site Scripting Attempt(31477)

- HTTP SQL Injection Attempt(35823)

- Multiple Web Servers HTTP_PROXY Traffic Redirection Vulnerability(39595)

- HTTP SQL Injection Attempt(57735)

- HTTP SQL Injection Attempt(94307)

- Gitscanner Traffic Detected(95497)

- Possible HTTP Malicious Payload Detection(91206)

[...]

Here’s a sample of a generated IP block list, ready to be imported via CLI or API into any network security equipment:

185.220.103.7

94.102.49.190

46.166.139.111

195.154.181.207

159.203.158.90

178.32.216.105

139.59.178.57

104.248.134.23

167.172.89.45

188.166.23.199

142.93.201.56

Technical Documentation (For Security Analysts)

Comprehensive evidence packages that provide complete justification for every security decision, enabling informed analysis and continuous improvement.

- Evidence Packages: Complete forensic trail for each blocked IP, including what they did, when they did it, and why it was considered threatening

- Vulnerability Intelligence: Analysis of which security weaknesses are being most actively exploited, helping prioritize patching efforts

- System Performance Metrics: How well is our detection working? Are we missing threats or blocking legitimate users?

This multi-layered output approach ensures that everyone from network administrators to C-level executives gets the information they need in a format they can immediately understand and act upon.

🔮 Future Enhancement Roadmap

Phase 2: Full Automation

- API Integration: Direct connection to Palo Alto firewall APIs for real-time log ingestion

- Automated Blocking: Direct implementation of block lists via firewall APIs

- Continuous Monitoring: Real-time threat detection, analysis, and reporting

Phase 3: Advanced Analytics

- Machine Learning Integration: Behavioral analysis for threat detection

- Predictive Analytics: Attack campaign forecasting and trend analysis

- Threat Intelligence Feeds: External intelligence integration for enhanced context

🚀 Operational Impact & Results

Measurable Business Improvements

- Time Reduction: From 1-2 hours to <10 minutes for comprehensive analysis

- Scale Increase: From 5-10 IPs to 100+ IPs per analysis cycle

- Coverage Expansion: Multi-algorithm approach detecting previously missed threats

- False Positive Reduction: Geographic differentiation minimizing legitimate user impact

Organizational Transformation

- Process Standardization: Eliminated human variability in threat assessment

- Decision Support: Data-driven blocking with complete evidence packages

- Resource Reallocation: Security analysts freed for strategic initiatives

- Audit Readiness: Automated documentation meeting compliance requirements

🎯 Professional Development & Strategic Impact

Technical Competencies Demonstrated

- System Architecture Design: Conceived multi-algorithm detection engine structure and data flow

- Algorithm Logic Development: Designed detection criteria and decision trees for threat classification

- Requirements Engineering: Translated cybersecurity needs into technical specifications for AI implementation

- AI-Assisted Development: Strategic use of AI tools while maintaining architectural control and validation

- Cybersecurity Domain Expertise: Deep understanding of IPS/IDS systems and threat analysis workflows

- Integration Specification: Defined Palo Alto Networks threat log processing requirements and field mapping

Professional Skills Acquired

- Problem Identification: Recognition of operational bottlenecks and automation opportunities

- Stakeholder Communication: Technical presentations to management and operations teams

- Project Leadership: Independent conception, development, and delivery of production tools

- Innovation Methodology: Pioneered AI-assisted development approach for enterprise cybersecurity tools

Key Learning Outcomes

This project demonstrated how AI tools can effectively accelerate cybersecurity tool development when combined with domain expertise and proper validation processes. The experience highlighted the value of focusing on security logic and requirements rather than implementation mechanics.