🏷️ Tech Stack: Python • TouchDesigner • WeatherAPI • Mistral AI • Stable Diffusion • Claude Anthropic • RunwayML Gen-4 • Multi-API Integration

Methodologies: Creative Coding • AI Agent Orchestration • Data-Driven Storytelling • Generative Design

My Role: Complete pipeline design, AI agent development, multi-API integration, TouchDesigner visual composition

Experimental Project - Weekend Lab & Creative Exploration | 2025

🔍 TL;DR - Executive Impact

Question: How can weather data become a story? This experimental AI pipeline transforms live environmental data into emotionally rich video narratives through automated agent orchestration.

Innovation: Five specialized AI agents working in sequence - from raw weather API calls to cinematic video generation - creating “Data-Driven Visual Narratives” that make factual information emotionally engaging.

Technical Achievement: Full automation of a complex creative-technical pipeline involving 5 external APIs, 4 specialized AI models, and real-time visual composition.

Learning Laboratory: Strategic experimentation ground for mastering AI agent orchestration, multi-API automation, and advanced prompt engineering - skills directly transferable to enterprise automation challenges.

Result: Functional system generating contextualized visual stories from any global city’s weather in 3-5 minutes, demonstrating the creative potential of structured AI agent workflows.

🎯 Context & Creative Vision

From Generative Art to Cybersecurity

This project represents a strategic return to my generative art and creative visualization roots, with a specific objective: developing AI agent orchestration expertise before applying it to cybersecurity.

The Creative Challenge

How to transform cold factual data into captivating visual experiences that tell a story? How to fully automate this creative transformation?

Vision: Imagine a future where cybersecurity data could be transformed into real-time visual narratives, making system observability both informative and aesthetically engaging.

Learning Opportunity

- AI Agent Orchestration: Each pipeline step uses a different specialized AI agent

- Multi-API Integration: Simultaneous management of 5 different external APIs

- Advanced Prompt Engineering: Instruction optimization for each AI model

- Real-Time Composition: TouchDesigner mastery for final integration

TouchDesigner Orchestration Architecture

🚀 Skills Developed

This project serves as a comprehensive learning laboratory for advanced automation and AI orchestration:

AI Agent Orchestration:

- Multi-model coordination (Mistral, Claude, Stable Diffusion, RunwayML)

- Cross-agent prompt consistency and quality management

- Automated pipeline error handling and fallback strategies

Technical Integration:

- Multi-API integration and automation in Python

- Prompt Engineering for cross-modal consistency

- Real-time data transformation and visual composition using TouchDesigner

- Asynchronous workflow management and API rate limiting

Innovation Methodology:

- Creative-technical prototyping with generative AI

- Data-to-visual pipeline architecture design

- Rapid experimentation framework for testing AI automation concepts

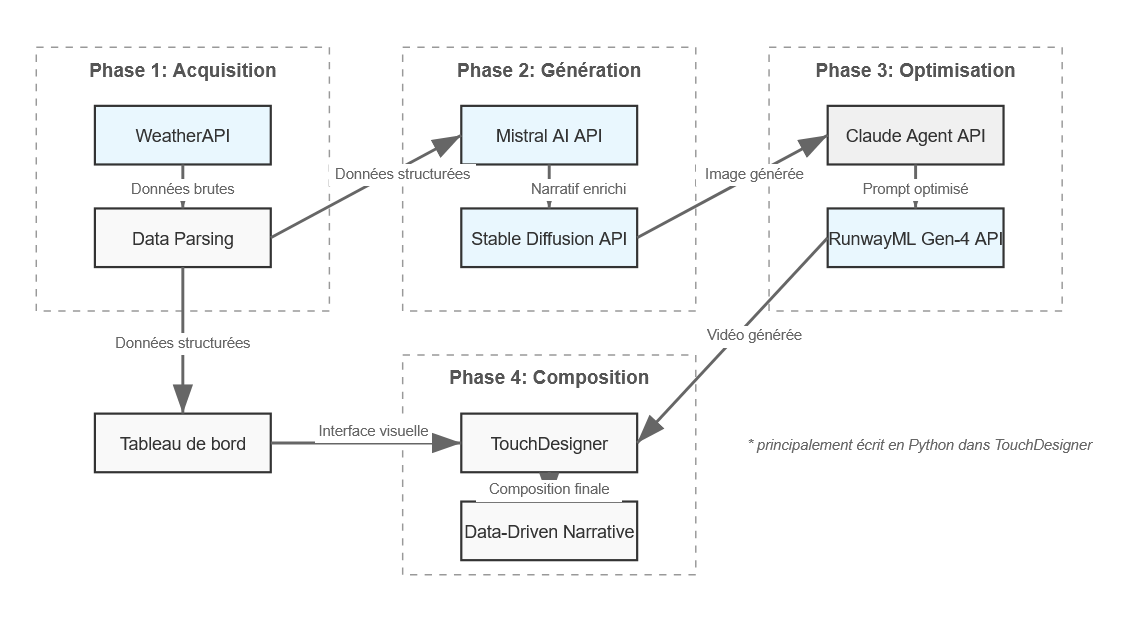

🏗️ AI Pipeline Architecture

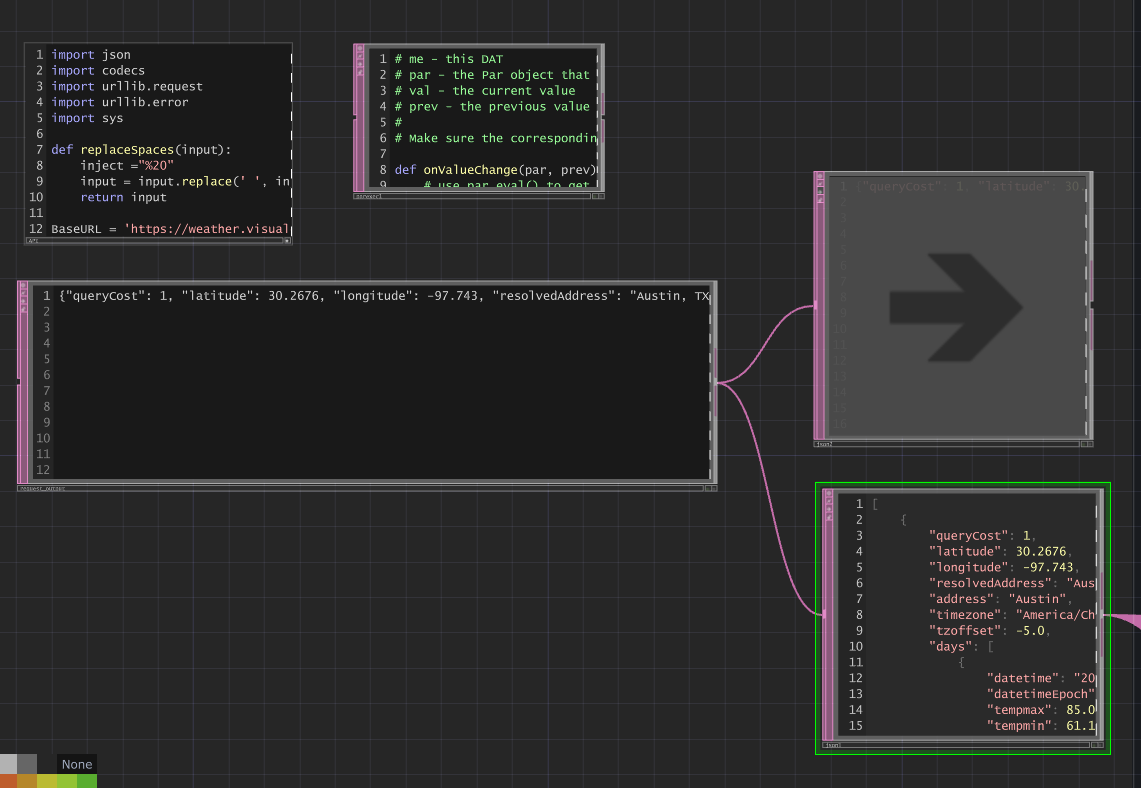

Phase 1: Data Acquisition

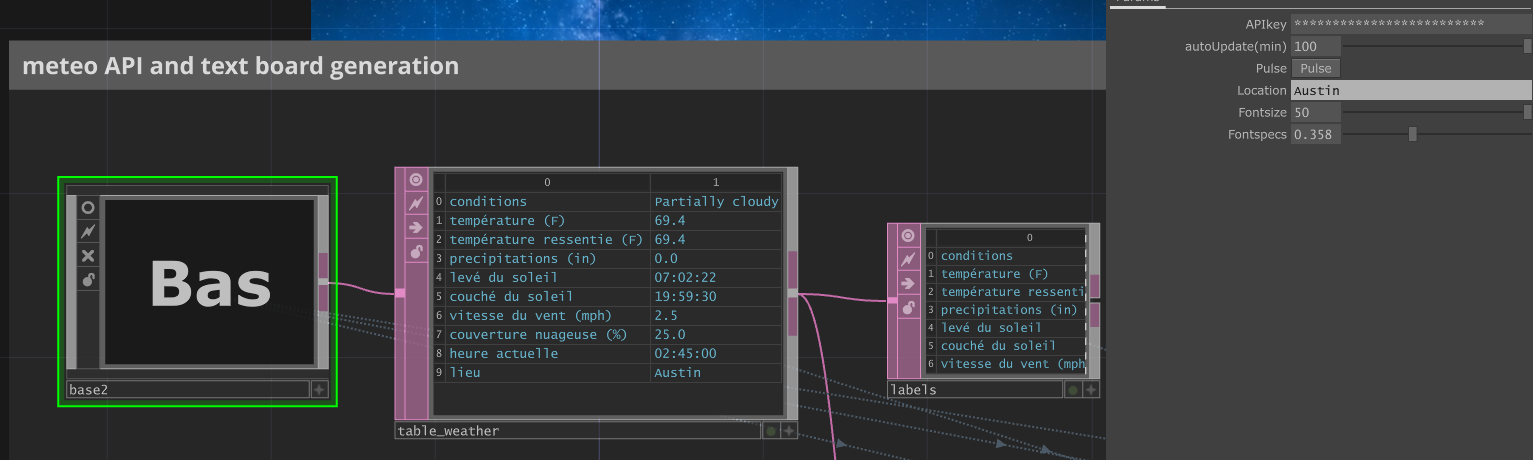

WeatherAPI + TouchDesigner Data Parsing

# Real-time weather data acquisition via VisualCrossing API

BaseURL = 'https://weather.visualcrossing.com/VisualCrossingWebServices/rest/services/timeline/'

Location = parent().par.Location.eval() # Dynamic location input

ApiQuery = BaseURL + replaceSpaces(Location)

# API parameters configuration

UnitGroup = 'us' # US units (Fahrenheit, mph)

ContentType = "json" # JSON response format

Include = "current" # Current conditions only

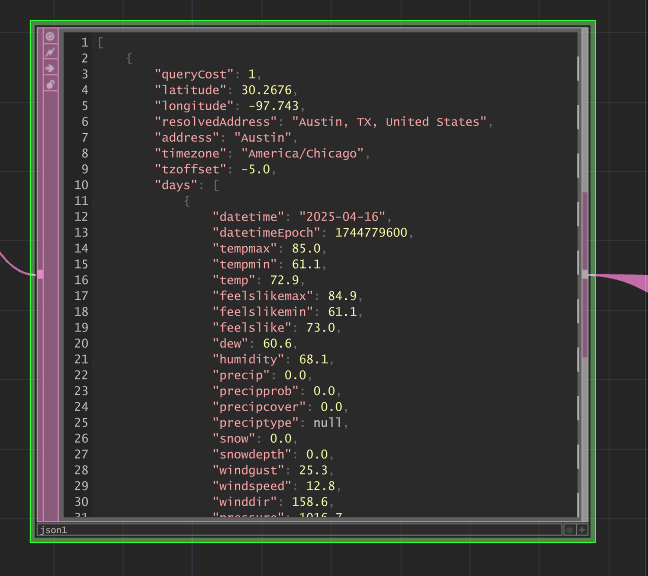

# Execute API call and parse response

data = urllib.request.urlopen(ApiQuery)

weatherData = json.loads(data.read().decode('utf-8'))

op('request_output').text = json.dumps(weatherData)

TouchDesigner Data Structure:

table_weather[0,1]→ Sky conditions (Clear, Cloudy, etc.)table_weather[1,1]→ Temperature (°F)table_weather[3,1]→ Precipitation (inches)table_weather[6,1]→ Wind speed (mph)table_weather[7,1]→ Cloud cover (%)table_weather[8,1]→ Current timetable_weather[9,1]→ Location (Austin, etc.)

Phase 2: Narrative Generation

Claude AI Agent → Stable Diffusion Optimization

Note: This section is currently being reworked. I primarily worked with MistralAI initially, but encountered stability issues in TouchDesigner. I’ve since switched to Claude for contextual prompt generation, though the dynamic weather data injection shown in the screenshot below is not yet fully re-implemented in the current version.

The Claude AI agent transforms raw weather data into enriched, cinematographically-optimized prompts for Stable Diffusion, with sophisticated prompt engineering for maximum visual quality.

Advanced Prompt Engineering System:

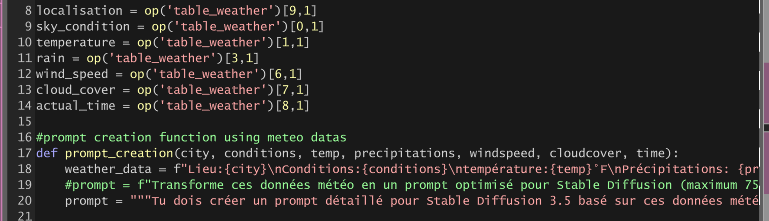

# Dynamic weather data injection from TouchDesigner

location = op('table_weather')[9,1] # Austin

sky_condition = op('table_weather')[0,1] # Clear

temperature = op('table_weather')[1,1] # 64.6°F

rain = op('table_weather')[3,1] # 0.0 inches

wind_speed = op('table_weather')[6,1] # 3.2 mph

cloud_cover = op('table_weather')[7,1] # 0% coverage

actual_time = op('table_weather')[8,1] # 03:45:00

user_message = f"""Create a detailed prompt for Stable Diffusion 3.5

based on this weather data from {location}, which will serve to generate

the opening image of a poetic film about love and solitude:

WEATHER DATA:

- Sky: {sky_condition} ({cloud_cover}% cloud coverage)

- Time: {actual_time}

- Temperature: {temperature}°F

- Wind: {wind_speed} mph

- Precipitation: {rain} in

- Location: {location}

NARRATIVE DIRECTIVE: The scene should evoke a cinematic atmosphere

that captures the essence of a love and solitude story in the city of {location}.

"""

Transformation Example:

Input: "Austin, Clear, 64.6°F, 3.2mph wind, 03:45 AM"

↓

Output: "Cinematic wide shot in style of Terrence Malick, establishing shot of Austin

at 3:45 AM with partially cloudy sky (30% coverage), a solitary figure stands on a

balcony overlooking the clear starry night, city lights twinkling below. Warm interior

light spills from an open door behind the silhouette. Anamorphic lens, shallow focus,

rich saturated colors, melancholic atmosphere suggesting longing and missed connections."

Claude Response Storage: op('Claude_agent_API_request_output_for_stable').text

Phase 3: Visual Generation

Stable Diffusion Ultra API + Post-Processing

Production-grade image generation with automatic post-processing pipeline optimized for RunwayML compatibility.

# Stable Diffusion Ultra API call

apicle = 'CLAUDE_API_KEY'

original_path = "generated_images/lighthouse.jpeg"

response = requests.post(

f"https://api.stability.ai/v2beta/stable-image/generate/ultra",

headers={

"authorization": f"Bearer {apicle}",

"accept": "image/*"

},

files={"none": ''},

data={

"prompt": op('Claude_agent_API_request_output_for_stable').text,

"output_format": "jpeg",

"negative_prompt": "cartoon, anime, illustration, drawing, painted, sketch, digital art, 3d render",

"seed": 0,

"style_preset": "photographic"

}

)

# Automated post-processing for RunwayML compatibility

if response.status_code == 200:

# Save original high-resolution image

with open(original_path, 'wb') as file:

file.write(response.content)

# Resize to 768x768 for optimal RunwayML processing

img = cv2.imread(original_path)

resized_img = cv2.resize(img, (768, 768), interpolation=cv2.INTER_LANCZOS4)

# Save resized version for video generation

resized_path = "generated_images/lighthouse_768x768.jpeg"

cv2.imwrite(resized_path, resized_img)

# Store base64 data in TouchDesigner

op('request_Output_base64').text = response.content

Technical Optimizations:

- Ultra quality model for maximum visual fidelity

- Negative prompting to ensure photographic realism

- Automatic resizing to 768x768 (RunwayML optimal resolution)

- LANCZOS4 interpolation for best quality scaling

- Dual storage (file + TouchDesigner TEXT DAT)

Phase 4: Animation & Optimization

Claude Cinematic Agent → RunwayML Gen-4

A specialized Claude agent analyzes the generated image and creates cinematographically-optimized animation prompts specifically tailored for RunwayML Gen-4’s requirements.

Advanced Claude Animation Agent:

def analyze_and_create_runway_prompt(image_path):

# Encode image for Claude vision analysis

base64_image = encode_image(image_path)

# Specialized system prompt for RunwayML optimization

system_prompt = """

You are an expert in creating prompts for RunwayML Gen-4, an AI model

that transforms static images into videos.

### DIRECTIVES FOR CREATING RUNWAYML GEN-4 PROMPT ###

## STRUCTURE (in this precise order) ##

1. SUBJECT MOVEMENT: How the main character(s) or object(s) should move

- Use generic terms like "the subject", "the woman", "the man", etc.

- Examples: "the subject slowly turns their head", "the woman walks along the street"

2. SCENE MOVEMENT: How the environment reacts or moves

- Animate environment elements identified in the image

- Examples: "leaves swirl in the wind", "rain creates ripples"

3. CAMERA MOVEMENT: How the camera moves

- Options: locked (static), handheld (shoulder camera), dolly, pan, tracking, etc.

- Examples: "camera slowly follows the subject", "static camera with slight shake"

4. STYLE DESCRIPTORS: General movement style

- Examples: "cinematic", "live-action", "slow motion"

## CRITICAL RULES ##

- Stay SIMPLE and DIRECT: 30-75 words maximum

- Describe ONLY the MOVEMENT, not what's already visible in the image

- Use ONLY POSITIVE formulations (never "without", "no", etc.)

- Avoid conversational language and commands

"""

# Claude API call with image analysis

response = client.messages.create(

model="claude-3-7-sonnet-20250219",

system=system_prompt,

max_tokens=150,

messages=[{

"role": "user",

"content": [

{"type": "text", "text": "Analyze this image and create a RunwayML Gen-4 prompt to animate it coherently."},

{"type": "image", "source": {"type": "base64", "media_type": "image/jpeg", "data": base64_image}}

]

}]

)

runway_prompt = response.content[0].text.strip()

return runway_prompt

# Update TouchDesigner output

op('Claude_agent_API_request_output_for_runwayML').text = runway_prompt

Example Claude Output:

Input Image: Silhouette on Austin balcony at night

↓

Claude Analysis: "the silhouette slowly leans forward on the balcony railing. clouds drift

across the golden sunset sky. light shifts on the brick building surface. camera locked

with subtle zoom. cinematic."

Phase 5: Video Generation & Polling

RunwayML Gen-4 API + Asynchronous Management

# RunwayML video generation with polling system

from runwayml import RunwayML

import runwayml

# Initial video generation request

client = RunwayML(api_key=os.environ.get("RUNWAYML_API_SECRET"))

image_path = "generated_images/lighthouse_768x768.jpeg"

# Task creation (not shown in provided code - inferred from ID polling)

# task = client.image_to_video.create(image=image_path, prompt=claude_optimized_prompt)

# Asynchronous task polling

id = op('runwayml_API_ID_output').text # Task ID from initial request

task = client.tasks.retrieve(id=id)

# Status management and result extraction

if task.status == 'SUCCEEDED' and task.output and len(task.output) > 0:

video_url = task.output[0]

print(f"Video URL: {video_url}")

op('runwayml_ID_endpoint_response').text = video_url

else:

print(f"Task status: {task.status}")

op('runwayml_ID_endpoint_response').text = f"Status: {task.status}, no URL available"

Phase 6: Interactive Composition

TouchDesigner Real-Time Integration

TouchDesigner serves as the central nervous system, orchestrating all pipeline components through its visual node graph:

Data Flow Architecture:

- Weather Interface: Real-time location input and parameter display

- API Orchestration: Each API call managed through dedicated TEXT DAT operators

- State Management: Pipeline progress tracked through operator connections

- Visual Composition: Final video integration with weather dashboard overlay

- User Interaction: Dynamic location updates triggering complete regeneration

TouchDesigner Integration Benefits:

- Visual Debugging: Real-time monitoring of each pipeline stage

- Modular Architecture: Easy to modify or extend individual components

- Performance Optimization: Parallel processing capabilities for multiple requests

- Creative Flexibility: Real-time effects and composition adjustments

🔄 Use Case Transposition

Why Weather → Why Not Security Data?

This weather pipeline demonstrates a replicable architecture pattern for transforming any real-time data into engaging visual narratives:

Current Input: Weather APIs (temperature, conditions, wind speed) Future Input: Security logs, threat feeds, network telemetry

Current Output: Cinematic videos reflecting weather mood

Future Output: Visual incident narratives, threat story dashboards

Transferable Architecture Patterns

# Generic pattern demonstrated:

raw_data → context_enrichment → visual_generation → animation → composition

# Weather application:

weather_api → narrative_prompt → styled_image → video → dashboard

# Potential security application:

security_logs → threat_context → risk_visualization → animated_alerts → SOC_display

Skills Directly Transferable:

- Agent orchestration → Multi-layer security analysis pipelines

- Prompt engineering → Optimized instructions for security AI agents

- Creative visualization → Engaging SOC dashboards and alert systems

- Multi-API coordination → Integration of heterogeneous security tools

This creative experiment builds the exact technical foundation needed for next-generation security observability - where complex data becomes instantly interpretable through automated visual storytelling.

🤖 Advanced AI Agent Orchestration

Agent 1: Claude Narrative Agent (Contextual Narrator)

# Specialization: Weather data → Cinematic narrative prompts

# Location: Claude_agent_API_request1 (TouchDesigner TEXT DAT)

system_prompt = """

Create a detailed prompt for Stable Diffusion 3.5 based on this weather data...

PROMPTING GUIDE FOR STABLE DIFFUSION 3.5:

1. STYLE: Always clearly specify the visual style at the beginning of the prompt

2. SUBJECT AND ACTION: Describe the main subject before any action it performs

3. COMPOSITION AND FRAMING: Use precise cinematographic terms

4. LIGHTING AND COLOR: Describe light quality and palette

5. TECHNICAL PARAMETERS: Add cinematography details

"""

# Dynamic weather injection from TouchDesigner table_weather

weather_context = {

'location': op('table_weather')[9,1],

'conditions': op('table_weather')[0,1],

'temperature': op('table_weather')[1,1],

'wind_speed': op('table_weather')[6,1],

'time': op('table_weather')[8,1]

}

Role: Transform factual weather data into rich, emotionally resonant cinematic prompts optimized for Stable Diffusion generation.

Agent 2: Stable Diffusion Ultra (Visual Generator)

# Specialization: Prompt → High-quality photographic images

# API: stability.ai/v2beta/stable-image/generate/ultra

api_params = {

"prompt": op('Claude_agent_API_request_output_for_stable').text,

"output_format": "jpeg",

"negative_prompt": "cartoon, anime, illustration, drawing, painted, sketch, digital art, 3d render",

"seed": 0,

"style_preset": "photographic"

}

# Automatic post-processing pipeline

post_processing = {

"original_resolution": "high_quality_generation",

"runwayml_optimization": "768x768_resize",

"interpolation": "cv2.INTER_LANCZOS4",

"storage": "dual_file_and_touchdesigner"

}

Role: Generate photorealistic, cinematographic-quality images that capture the emotional essence of the weather narrative.

Agent 3: Claude Vision Agent (Cinematographic Optimizer)

# Specialization: Image analysis → RunwayML animation prompts

# Location: Claude_agent_API_request_output_for_runwayML

specialized_instructions = {

"image_analysis": "base64_vision_input",

"movement_categories": ["SUBJECT", "SCENE", "CAMERA", "STYLE"],

"output_constraints": "30-75_words_maximum",

"language_rules": "positive_formulations_only",

"technical_terms": ["locked", "handheld", "dolly", "pan", "tracking"]

}

vision_analysis = """

Analyze this image and create a RunwayML Gen-4 prompt to animate it coherently.

Focus on movement that enhances the emotional narrative without breaking visual coherence.

"""

Role: Computer vision analysis specialist creating optimized animation instructions that maintain narrative coherence.

Agent 4: RunwayML Gen-4 (Professional Animator)

# Specialization: Image + Prompt → Cinematic video sequences

# API: RunwayML Gen-4 with asynchronous polling

workflow = {

"input_image": "768x768_optimized_jpeg",

"animation_prompt": "claude_vision_optimized",

"generation_model": "gen-4_professional",

"output_format": "mp4_10_seconds",

"polling_system": "asynchronous_task_monitoring"

}

# Asynchronous task management

task_status_flow = {

"PENDING": "generation_in_progress",

"SUCCEEDED": "extract_video_url",

"FAILED": "error_handling_and_retry"

}

Role: Transform static images into dynamic, emotionally engaging video sequences that bring the weather narrative to life.

Agent Coordination Protocol

# TouchDesigner orchestration flow

pipeline_sequence = [

"weather_api → table_weather",

"table_weather → claude_narrative_agent",

"claude_output → stable_diffusion_api",

"sd_image → claude_vision_agent",

"claude_vision → runwayml_generation",

"runwayml_video → touchdesigner_composition"

]

# Error handling and state management

error_recovery = {

"api_timeout": "retry_with_exponential_backoff",

"quota_exceeded": "queue_for_later_execution",

"invalid_response": "fallback_to_previous_step",

"quality_threshold": "regenerate_with_adjusted_prompts"

}

🎬 Results & Creative Examples

System Performance

- Total generation time: ~3-5 minutes per video

- Simultaneous APIs: 5 coordinated external services

- Final resolution: 1920x1080, 5-second video

- Visual quality: Narrative coherence maintained throughout the chain

🔧 Advanced Technical Challenges & Solutions

TouchDesigner as Central Nervous System

Challenge: Orchestrating 5 different APIs through a visual programming environment while maintaining state consistency and error handling.

Solution - Hub Architecture:

# TouchDesigner TEXT DAT operators serve as API endpoints

api_endpoints = {

"weather_input": "table_weather", # Structured weather data

"narrative_output": "Claude_agent_API_request_output_for_stable", # SD-optimized prompts

"image_storage": "request_Output_base64", # Base64 image data

"animation_prompts": "Claude_agent_API_request_output_for_runwayML", # RunwayML prompts

"video_urls": "runwayml_ID_endpoint_response", # Final video URLs

"task_ids": "runwayml_API_ID_output" # RunwayML task tracking

}

# Visual node graph enables real-time monitoring

data_flow_visualization = {

"weather_parsing": "visual_table_display",

"api_call_status": "color_coded_connections",

"error_states": "red_connection_indicators",

"processing_progress": "animated_data_flow"

}

Innovation: TouchDesigner transforms from a creative tool into a production-grade orchestration platform with visual debugging capabilities.

Sophisticated Prompt Engineering Chain

Challenge: Maintaining narrative coherence through 4 AI model transformations while optimizing for each model’s specific requirements.

Solution - Specialized Prompt Architecture:

# Claude Narrative Agent - Weather to Story Context

narrative_system_prompt = """

PROMPTING GUIDE FOR STABLE DIFFUSION 3.5:

1. STYLE: Always clearly specify the visual style at the beginning of the prompt

2. SUBJECT AND ACTION: Describe the main subject before any action

3. COMPOSITION AND FRAMING: Use precise cinematographic terms

4. LIGHTING AND COLOR: Describe light quality and palette

5. TECHNICAL PARAMETERS: Add cinematography details

"""

# Claude Vision Agent - Image to Animation Optimization

animation_system_prompt = """

STRUCTURE (in this precise order):

1. SUBJECT MOVEMENT: How the character(s) should move

2. SCENE MOVEMENT: How the environment reacts or moves

3. CAMERA MOVEMENT: locked, handheld, dolly, pan, tracking

4. STYLE DESCRIPTORS: cinematic, live-action, slow motion

CRITICAL RULES:

- 30-75 words maximum

- POSITIVE formulations only

- Describe ONLY the MOVEMENT

"""

# Context injection for consistency

weather_context_injection = {

"original_weather": "maintained_through_all_transformations",

"location_consistency": "city_name_preserved_in_prompts",

"atmospheric_coherence": "weather_mood_reflected_in_animation",

"temporal_accuracy": "time_of_day_consistent_across_pipeline"

}

Asynchronous Video Generation Management

Challenge: RunwayML Gen-4 requires asynchronous polling with variable generation times (30 seconds to 5 minutes).

Solution - Polling Architecture:

# Asynchronous task lifecycle management

async def runway_video_pipeline():

# Phase 1: Task submission

task_creation = {

"image_input": "768x768_optimized_jpeg",

"prompt_input": "claude_vision_optimized",

"api_response": "task_id_for_polling"

}

# Phase 2: Status polling with exponential backoff

polling_strategy = {

"initial_delay": "30_seconds",

"max_attempts": "20_iterations",

"backoff_multiplier": "1.2x_per_attempt",

"status_options": ["PENDING", "PROCESSING", "SUCCEEDED", "FAILED"]

}

# Phase 3: Result extraction and error handling

result_management = {

"success": "extract_video_url_to_touchdesigner",

"failure": "log_error_and_trigger_retry",

"timeout": "mark_as_failed_after_10_minutes"

}

# TouchDesigner integration

op('runwayml_API_ID_output').text = task_id

op('runwayml_ID_endpoint_response').text = video_url_or_status

Production-Grade Image Processing Pipeline

Challenge: Ensure optimal image quality and format compatibility between Stable Diffusion and RunwayML while maintaining processing speed.

Solution - Automated Post-Processing:

# Multi-stage image optimization

def optimize_for_video_generation(original_image_response):

# Stage 1: High-quality generation preservation

original_path = "generated_images/lighthouse.jpeg"

with open(original_path, 'wb') as file:

file.write(original_image_response.content)

# Stage 2: RunwayML optimization (768x768 is optimal)

img = cv2.imread(original_path)

resized_img = cv2.resize(img, (768, 768), interpolation=cv2.INTER_LANCZOS4)

# Stage 3: Quality preservation with LANCZOS4 interpolation

resized_path = "generated_images/lighthouse_768x768.jpeg"

cv2.imwrite(resized_path, resized_img)

# Stage 4: TouchDesigner integration

op('request_Output_base64').text = original_image_response.content

return {"original": original_path, "optimized": resized_path}

# Quality metrics and validation

quality_assurance = {

"resolution_check": "verify_768x768_dimensions",

"aspect_ratio": "maintain_1:1_for_runway_compatibility",

"file_size": "optimize_for_api_upload_limits",

"format_validation": "ensure_jpeg_compatibility"

}

Multi-API Error Handling & Resilience

Challenge: Coordinate 5 external APIs with different rate limits, response times, and failure modes.

Solution - Robust Error Management:

# API-specific error handling strategies

api_resilience_patterns = {

"weather_api": {

"timeout": "10_seconds",

"retry_attempts": "3",

"fallback": "cached_weather_data"

},

"claude_api": {

"rate_limit_handling": "exponential_backoff",

"token_management": "environment_variable_security",

"response_validation": "content_length_and_format_check"

},

"stable_diffusion": {

"quota_management": "usage_tracking",

"image_validation": "response_status_and_content_type",

"retry_logic": "different_seed_on_failure"

},

"runwayml": {

"async_polling": "task_id_based_status_checking",

"timeout_management": "10_minute_maximum_wait",

"result_validation": "video_url_accessibility_test"

}

}

# Global error recovery

def pipeline_error_recovery(error_stage, error_type):

recovery_strategies = {

"network_timeout": "retry_with_increased_timeout",

"quota_exceeded": "queue_for_later_execution",

"invalid_response": "log_and_skip_to_fallback",

"rate_limit": "implement_exponential_backoff"

}

return recovery_strategies.get(error_type, "manual_intervention_required")

🔮 Evolution Roadmap

Phase 2: Self-Improving Pipeline

# Automatic quality scoring system

quality_score = evaluate_video_quality(generated_video)

if quality_score < threshold:

regenerate_with_improved_prompts()

Objective: AI evaluation agent that scores visual coherence and automatically recreates until reaching a quality threshold.

Phase 3: Multi-Scene & Storyboard

- AI storyboard generation to create multi-sequence narratives

- Advanced video composition with transitions and effects

- Adaptive narratives based on weather history

Phase 4: Enterprise Data Applications

- Security log narratives: Incident stories with visual context

- Business intelligence storytelling: KPI data transformed into engaging reports

- Real-time operational dashboards: System health as visual narratives

Current Status: Functional prototype with 80% automated pipeline. Next milestone: implementation of 100% automation, quality self-evaluation system and exploration of cybersecurity data applications.

Learning Impact: First complete multi-agent AI orchestration experience, building the technical foundation for advanced automation in professional contexts.