🏷️ Tech Stack: Python • Webserver • Palo Alto Networks • EDL • GitLab CI • JSON APIs • Firewall • IPS/IDS • Network

Methodologies: DevSecOps • AI-Assisted Development • Threat Intelligence

My Role: Full design, development, deployment & documentation of the system, plus GitLab CI/Docker architecture planning and project management

Production Project - Developed during apprenticeship at Orange Reunion IP Network Department | 2024-2025

📋 TL;DR - Executive Summary

Problem: Legitimate Google crawlers were being blocked by newly deployed Palo Alto firewalls, potentially impacting advertising revenue and SEO. Manual management of Google’s 4 dynamic IP lists (via JSON APIs) took 1-2 hours weekly with error risks.

Solution: Python automation system using Palo Alto’s External Dynamic Lists (EDL) to automatically transform Google’s JSON APIs into firewall-consumable IP lists. Hybrid architecture: Python + webserver + GitLab for maximum reliability.

Technical Approach: AI-assisted development methodology, robust security (credential management, secure logging) and automated installation.

Learning: First extensive production experience with AI-assisted development methodology, learned more about the DevSecOps lifecycle.

Results: Successful production deployment, zero manual maintenance, complete audit trail, marketing revenue protection. Planned evolution to cloud-native architecture.

Impact: Business problem solved, transformation of a time-consuming manual process into a secure automated solution.

🚨 Business Context & Problem

New Firewall Infrastructure

The IP Network Department at Orange Reunion had recently completed the deployment of a new Palo Alto firewall infrastructure, enabling deep packet inspection and application-aware security policies from the network layer up to the application layer of the OSI model.

Problem: Googlebot Traffic Blocked

During routine monitoring, the Digital Marketing team identified that Google crawlers were being blocked by the new Palo Alto firewalls.

- New firewall deployment with default restrictive policies - no crawler allowlists had been configured yet

- Legacy systems had no automation - previous firewalls were managed manually without any automated processes

- Fresh opportunity for modernization - this was the perfect occasion to implement intelligent automation

The Technical Challenge

The situation presented a complex technical challenge that went beyond simple IP list management:

- Google publishes four separate lists of IP ranges, one per crawler category (main search crawler, special crawlers, and two lists of user-triggered fetchers)

- These IP ranges change dynamically without notification to website operators

- Google only publishes these IP ranges as JSON APIs, not in firewall-consumable formats

Business Impact & Opportunity

This technical discovery created both immediate business pressure and strategic opportunity:

Immediate Impact:

- Blocked Google crawlers affecting website indexing and SEO performance

- Potential revenue impact on Google Ads effectiveness and organic traffic

The core opportunity: Transform a simple firewall configuration issue into a comprehensive automation solution aligned with modern NetOps practices.

💡 AI-Assisted Development Methodology

Strategic AI Integration in Enterprise Development

Rather than spending months learning every Python technique from scratch, I used an AI-assisted development methodology for this project: using AI as a coding partner to accelerate implementation while I maintained complete control over security requirements, architecture decisions, and business logic.

Development Process

1. Requirements Engineering & Architecture (100% Human)

- Analyzed the business problem: blocked Googlebot crawlers impacting advertising revenue

- Designed the EDL-based solution architecture

- Specified security requirements and enterprise constraints

- Defined the dual-path approach (web server + GitLab integration)

- Developed initial code structure

2. AI-Powered Implementation (AI-Assisted)

- Converted my specifications and code into advanced Python code

- Generated setup scripts with robust error handling

- Implemented permission management for directories and webserver

- Created comprehensive documentation and troubleshooting guides

3. Iterative Validation & Refinement (Human-Led)

- Reviewed and tested all AI-generated code components

- Verified security implications and enterprise compliance

- Validated functionality and refined implementation

🎯 Solution Architecture

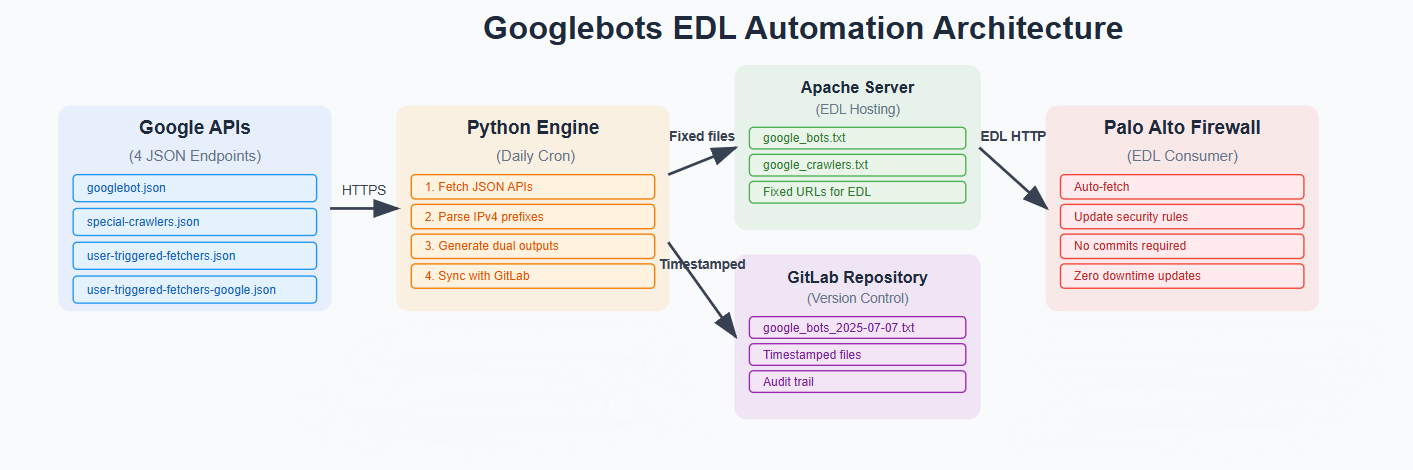

I implemented an automated system that transforms Google’s JSON APIs into firewall-consumable External Dynamic Lists (EDL).

Understanding External Dynamic Lists (EDL)

What are EDLs? External Dynamic Lists are a native Palo Alto firewall feature: the firewall periodically fetches an IP list from an external web server (HTTP/HTTPS) and applies it in security rules without a manual commit for each change. With an EDL, you point the firewall to a URL (like http://yourserver.com/googlebot_ips.txt), and it picks up the latest IPs at each refresh.

Phase 1: Core Automation Engine

System architecture showing data


Hybrid EDL Approach: Instead of direct API integration, I chose a hybrid solution combining automation with Palo Alto’s native EDL functionality:

- Python orchestrator fetches Google’s four JSON APIs daily

- Dual-output system generates both timestamped archives and fixed URLs

- Web server hosts the lists at static URLs for Palo Alto consumption

- EDL integration enables dynamic firewall updates without commits

- GitLab synchronization maintains version control and audit trails

Why this approach? The hybrid method offers several advantages over direct API integration: a simpler implementation, less development overhead, and no need for change-detection scripts that monitor the IP lists for modifications.

Phase 2: Enterprise Security & Automation

The second phase focused on making this a production-ready solution with security and automation improvements:

- Secure credential management via environment variables only available for this project

- Automated secure logging with token masking and sensitive data protection

- Automated installation via three self-contained setup scripts

- Complete documentation with step-by-step guides and troubleshooting

- Error handling & monitoring with detailed diagnostics and recovery procedures

- Package isolation through Python virtual environments

- Permission management

🏗️ Technical Implementation Deep Dive

Architecture Overview

Data Flow & Processing

This is what happens every day when the automation runs:

# Daily workflow

1. Fetch Google JSON APIs (4 endpoints)

├── googlebot.json # Main search crawler IPs

├── special-crawlers.json # Specialized crawlers

├── user-triggered-fetchers.json # User-initiated fetches

└── user-triggered-fetchers-google.json # Google-specific fetches

2. Parse IPv4 prefixes from JSON responses using ip_utils.py

3. Generate dual-format outputs:

├── Web server: Fixed filenames (google_bots.txt)

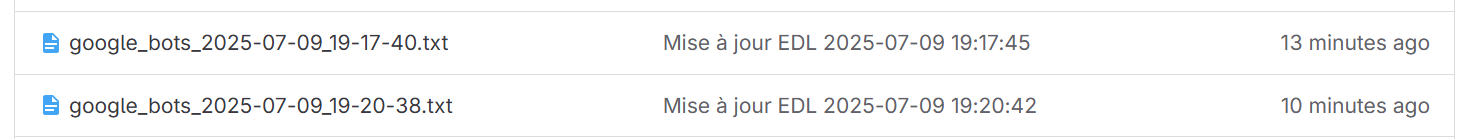

└── Git repo: Timestamped files (google_bots_2025-07-10_14-30-05.txt) for history

4. Sync with GitLab (pull → commit → push) for audit trail

Why dual outputs? Palo Alto EDL requires fixed URLs - the firewall is configured to check http://server/google_bots.txt and expects that URL to always work. But for audit and compliance, we also need historical tracking of what IPs were active when. So we generate both: fixed-name files for the firewall, and timestamped files for GitLab version control.
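The write step itself is not shown in this write-up. As a minimal sketch of the dual-output idea, assuming a hypothetical helper named `write_dual_outputs` (the project's real writer is `write_list_IP`, whose body is not reproduced here), the same content could be written once under a fixed name and once under a timestamped name:

```python
from datetime import datetime
from pathlib import Path


def write_dual_outputs(prefixes, name, web_dir, git_dir, now=None):
    """Write one fixed-name file for the EDL and one timestamped file for history.

    Hypothetical helper for illustration; not the project's write_list_IP.
    """
    now = now or datetime.now()
    body = "".join(f"{prefix}\n" for prefix in prefixes)

    web_dir, git_dir = Path(web_dir), Path(git_dir)
    web_dir.mkdir(parents=True, exist_ok=True)
    git_dir.mkdir(parents=True, exist_ok=True)

    # Fixed name: the URL configured on the firewall never changes.
    fixed_path = web_dir / f"{name}.txt"
    fixed_path.write_text(body, encoding="utf-8")

    # Timestamped name: one file per run, kept for audit purposes.
    stamp = now.strftime("%Y-%m-%d_%H-%M-%S")
    history_path = git_dir / f"{name}_{stamp}.txt"
    history_path.write_text(body, encoding="utf-8")

    return fixed_path, history_path
```

Passing `now` explicitly keeps the function easy to test; in normal use it would default to the current time.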

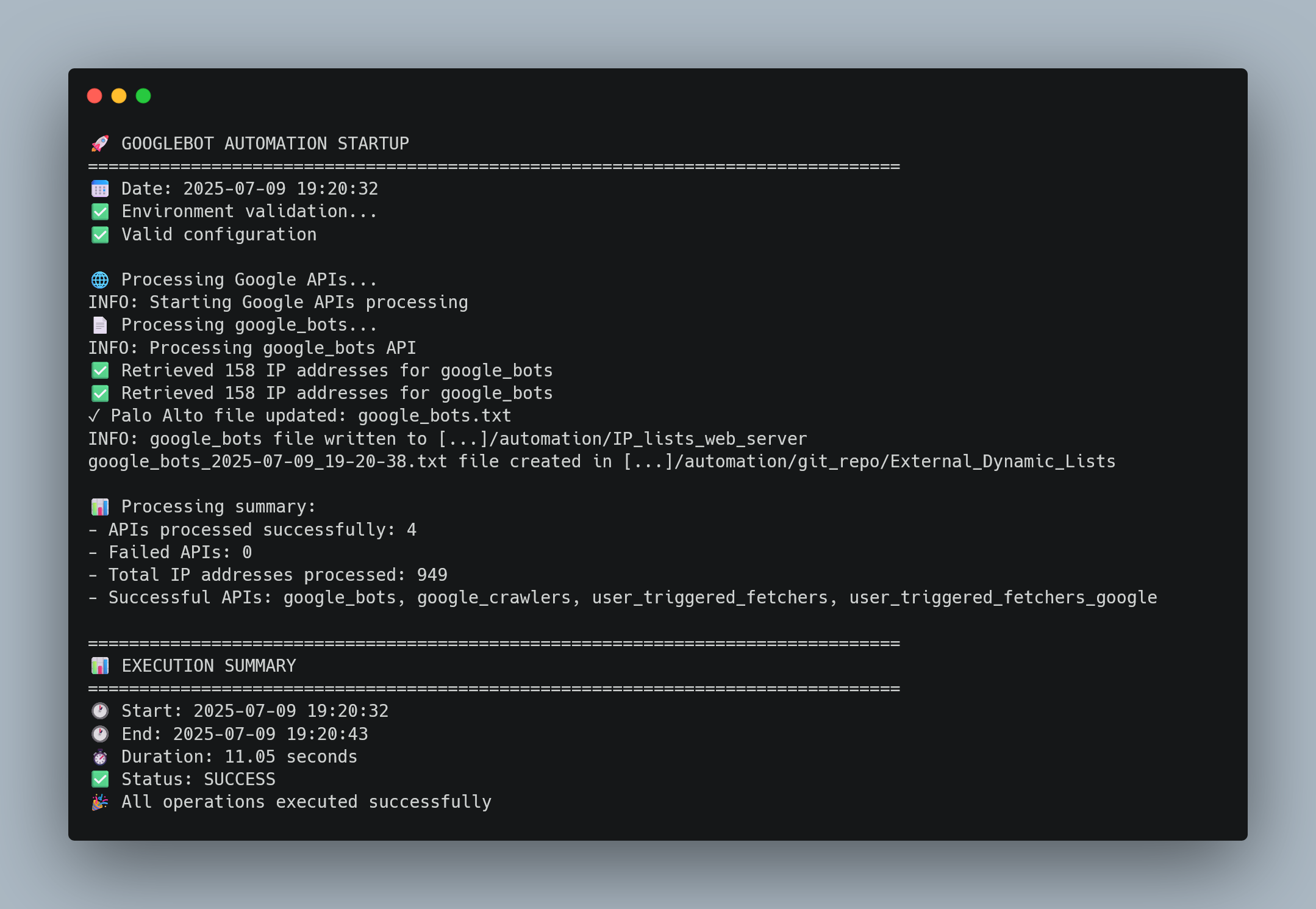

Automated Execution in Production:

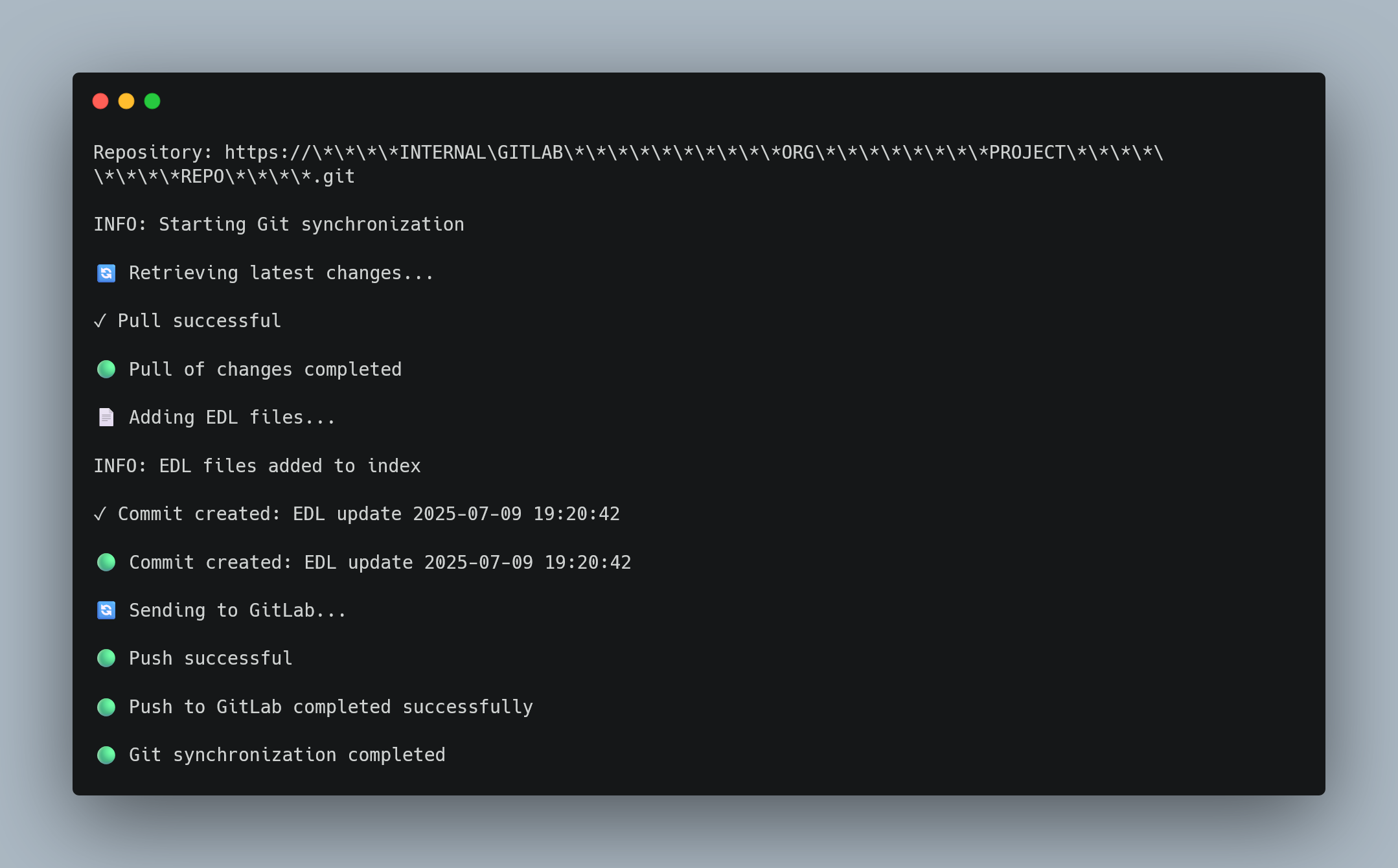

Daily automated execution showing successful API calls, dual file generation, and GitLab synchronization


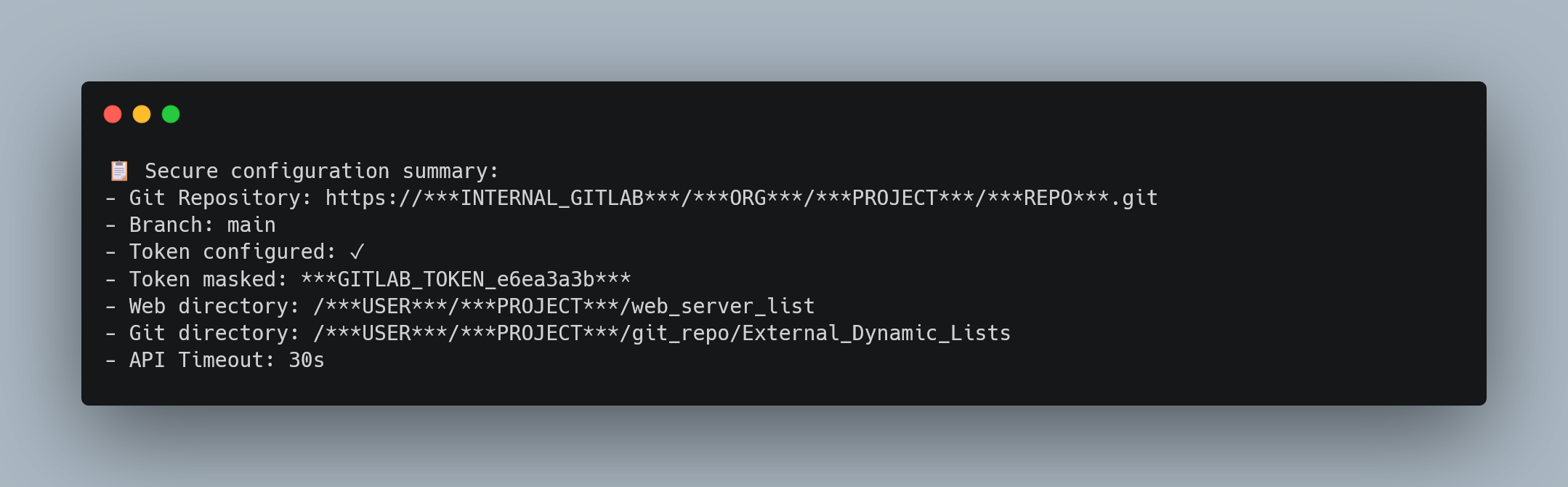

Secure Credential Management & Security System

Current Security Implementation: The system uses a comprehensive security model with environment variables and automated token masking:

# config.py - Secure credential loading

import os

from dotenv import load_dotenv

load_dotenv() # Loads .env file securely

GIT = {

"token": get_env_variable('GITLAB_TOKEN'),

"repo_url": get_env_variable('GITLAB_REPO_URL'),

"branch": get_env_variable('GITLAB_BRANCH', 'main', required=False)

}

Why environment variables approach:

- Credential isolation: Secrets stored in a .env file with restricted permissions

- Version control safety: .env automatically added to .gitignore to prevent accidental commits

- Validation and error handling: Automatic validation of required variables with clear error messages

- Cloud-native preparation: Direct compatibility with GitLab CI/CD protected variables for future migration

Token Masking Implementation: A comprehensive logging security system automatically masks sensitive data in all output:

    # secure_logging.py - Automatic token masking
    import re

    def mask_sensitive_data(message):
        """Replace GitLab tokens and credential-bearing URLs with placeholders."""
        patterns = [
            (r'glpat-[a-zA-Z0-9_-]{20,}', 'glpat-***MASKED***'),
            (r'https://oauth2:[^@]+@', 'https://oauth2:***MASKED***@'),
        ]
        for pattern, replacement in patterns:
            message = re.sub(pattern, replacement, message)
        return message

    def safe_print(message):
        """Print a message after masking any sensitive data it contains."""
        masked_message = mask_sensitive_data(message)
        print(masked_message)

Security benefits:

- Automatic protection: All print statements replaced with safe_print() for consistent masking

- Log file security: No exposed tokens in historical logs

- Error message safety: Git errors and exceptions automatically sanitized

- Multiple pattern detection: Supports various token formats and sensitive data types

Logs showing successful secure masking
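The project replaces `print` with `safe_print()`. If the standard `logging` module were used instead, the same masking could be attached to a handler so that no call site can bypass it. The sketch below is an alternative technique, not the project's implementation; `MaskingFilter`, the logger name, and the sample URL are hypothetical, and the token is a made-up value.

```python
import io
import logging
import re

# Same two patterns as mask_sensitive_data in the write-up.
MASK_PATTERNS = [
    (re.compile(r"glpat-[a-zA-Z0-9_-]{20,}"), "glpat-***MASKED***"),
    (re.compile(r"https://oauth2:[^@]+@"), "https://oauth2:***MASKED***@"),
]


def mask_sensitive_data(message):
    for pattern, replacement in MASK_PATTERNS:
        message = pattern.sub(replacement, message)
    return message


class MaskingFilter(logging.Filter):
    """Hypothetical filter: masks the fully formatted message of each record."""

    def filter(self, record):
        record.msg = mask_sensitive_data(record.getMessage())
        record.args = ()  # the message is already formatted
        return True


# Demo: log to an in-memory stream so the result can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(MaskingFilter())

logger = logging.getLogger("edl_masking_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

fake_token = "glpat-" + "x" * 20  # made-up value, not a real token
logger.error("push failed: https://oauth2:%s@gitlab.example.com/group/repo.git", fake_token)

masked_output = stream.getvalue()
print(masked_output, end="")
```

Because the filter sits on the handler, every record written through that handler is masked, including messages built from exception text.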


Core Python Components

The core Python components are structured as follows:

Centralized Configuration Hub - config.py

This module stores all project settings (Google API URLs, file paths, GitLab credentials) in one place for easy maintenance:

    # Secure environment variable loading with validation
    def get_env_variable(var_name, default_value=None, required=True):
        value = os.getenv(var_name, default_value)
        if required and (value is None or value.strip() == ""):
            raise ValueError(
                f"Missing required environment variable: {var_name}\n"
                f"Check your .env file and make sure {var_name} is defined."
            )
        return value

# Google APIs endpoints - easily updatable if Google changes URLs

URLS_IP_RANGES = {

"google_bots": "https://developers.google.com/search/apis/ipranges/googlebot.json",

"google_crawlers": "https://developers.google.com/search/apis/ipranges/special-crawlers.json",

"user_triggered_fetchers": "https://developers.google.com/search/apis/ipranges/user-triggered-fetchers.json",

"user_triggered_fetchers_google": "https://developers.google.com/search/apis/ipranges/user-triggered-fetchers-google.json"

}

# Dynamic path calculation based on project structure

SCRIPT_DIR = os.path.dirname(os.path.abspath(__file__))

BASE_DIR = os.path.dirname(SCRIPT_DIR)

PATHS = {

"web_server": os.path.join(BASE_DIR, "web_server_list"),

"git_repo": os.path.join(BASE_DIR, "git_repo", "External_Dynamic_List")

}

# Secure Git configuration with validation

GIT = {

"token": get_env_variable('GITLAB_TOKEN'),

"repo_url": get_env_variable('GITLAB_REPO_URL'),

"branch": get_env_variable('GITLAB_BRANCH', 'main', required=False),

"repo_path": os.path.join(BASE_DIR, "git_repo")

}

Why centralized configuration matters: Changes like adding a new Google API endpoint, updating GitLab credentials, or modifying paths only require editing one file. This prevents configuration drift and makes maintenance much easier.

The Orchestrator - main.py

This module coordinates the entire workflow: cloning GitLab repo, fetching Google APIs, processing IP lists, and pushing changes:

    def main():
        # First, ensure we have a local Git repository for version control.
        # The repository root is GIT['repo_path']; PATHS['git_repo'] is the
        # External_Dynamic_List subdirectory inside it, so .git is checked at the root.
        if not os.path.exists(GIT['repo_path']):
            print("No Git repository found, cloning from GitLab...")
            clone_repository()
        elif not os.path.isdir(os.path.join(GIT['repo_path'], '.git')):
            print("Directory exists but isn't a Git repo. Re-cloning...")
            clone_repository()
        else:
            print("Git repository already exists and ready.")

        # Process each of Google's four IP lists
        for name, url in URLS_IP_RANGES.items():
            print(f"Processing {name} from {url}")
            json_data = curl_get_JSON_with_subprocess(url)
            if json_data:
                IP_list = parsing_json_data(json_data)
                # Write to both web server (for EDL) and Git repo (for history)
                for path in PATHS.values():
                    write_list_IP(IP_list, name, path)

        # Finally, commit and push changes to GitLab
        push_changes()

Google API Integration - ip_utils.py

This module handles communication with Google’s servers and converts JSON data into firewall-ready IP lists:

    import json
    import subprocess

    def curl_get_JSON_with_subprocess(url):
        # -v: verbose output on stderr; -L: follow redirects;
        # -k: disables TLS certificate verification
        cmd = ["curl", "-v", "-L", "-k", url]
        try:
            result = subprocess.run(cmd, capture_output=True, text=True, check=True)
            json_data = json.loads(result.stdout)
            return json_data
        except subprocess.CalledProcessError as e:
            print(f"Curl failed (code {e.returncode}): {e.stderr}")
            return None
        except json.JSONDecodeError:
            print("Response wasn't valid JSON - Google might have changed their API")
            return None

    def parsing_json_data(json_data):
        """
        Extracts only IPv4 prefixes from Google's JSON response.
        Google's JSON contains both IPv4 and IPv6, but our firewalls only need IPv4.
        """
        if json_data:
            ipv4_prefix_list = []
            prefixes_data = json_data.get("prefixes", [])
            for prefix in prefixes_data:
                if "ipv4Prefix" in prefix:
                    # Add newline for proper file formatting
                    ipv4_prefix_list.append(prefix["ipv4Prefix"] + "\n")
            return ipv4_prefix_list
        else:
            return []

    import os
    import stat

    def set_web_permissions(filepath):
        """Configure the web server's permissions and ownership."""
        try:
            # Mode 644: read/write for the owner, read-only for group and others
            os.chmod(filepath, stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP | stat.S_IROTH)
            # Change ownership to user:webserver so the web server can read the file
            import pwd
            import grp
            current_user = os.getenv('USER', 'user')
            try:
                user_uid = pwd.getpwnam(current_user).pw_uid
                webserver_gid = grp.getgrnam('webserver').gr_gid
                os.chown(filepath, user_uid, webserver_gid)
            except (KeyError, PermissionError):
                print(f"⚠️ Unable to change the owner of {filepath} (may require sudo)")
            return True
        except Exception as e:
            print(f"Warning: unable to set permissions on {filepath}: {e}")
            return False
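To make the parsing step concrete, here is a small self-contained sketch run against an inline sample payload. The sample values are illustrative only, and `extract_ipv4_prefixes` is a hypothetical variant of `parsing_json_data` that additionally validates each entry with the standard `ipaddress` module, which the original function does not do.

```python
import ipaddress
import json

# Illustrative payload in the shape of Google's published lists.
SAMPLE = """
{
  "creationTime": "2025-07-10T14:30:05.000000",
  "prefixes": [
    {"ipv6Prefix": "2001:4860:4801:10::/64"},
    {"ipv4Prefix": "66.249.64.0/27"},
    {"ipv4Prefix": "not-a-network"}
  ]
}
"""


def extract_ipv4_prefixes(payload):
    """Return valid IPv4 CIDR strings; skip IPv6 and malformed entries."""
    prefixes = []
    for entry in payload.get("prefixes", []):
        candidate = entry.get("ipv4Prefix")
        if candidate is None:
            continue  # IPv6-only entry
        try:
            network = ipaddress.ip_network(candidate, strict=False)
        except ValueError:
            continue  # malformed value: keep it out of the firewall list
        if network.version == 4:
            prefixes.append(str(network))
    return prefixes


prefixes = extract_ipv4_prefixes(json.loads(SAMPLE))
print(prefixes)
```

Validating before writing means a malformed upstream entry is dropped rather than published to the firewall.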

Secure Git Operations - git_utils.py

This module manages GitLab synchronization for audit trails while protecting sensitive credentials:

    import datetime
    import git  # GitPython

    def push_changes():
        """
        Implements a safe Git workflow: pull first, then commit, then push.
        This prevents conflicts if multiple systems were updating the repository.
        """
        try:
            repo = git.Repo(GIT['repo_path'])
            update_remote_url(repo, GIT['token'])  # Use OAuth2 token for auth
            safe_print(f"Pulling latest changes from {GIT['branch']}...")
            repo.remote(name='origin').pull(GIT['branch'])
            # Add all .txt files in the EDL directory
            repo.git.add('External_Dynamic_List/*.txt')
            if repo.is_dirty():
                # Create a meaningful commit message with timestamp
                # ("Mise à jour EDL" is French for "EDL update")
                commit_message = f"Mise à jour EDL {datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')}"
                repo.index.commit(commit_message)
                # Push with explicit authentication
                push_url = f"https://oauth2:{GIT['token']}@{GIT['repo_url'].split('https://')[1]}"
                repo.git.push(push_url, GIT['branch'])
                safe_print("✓ Changes pushed to GitLab successfully")
            else:
                safe_print("No changes detected - repository already up to date")
        except Exception as e:
            safe_print(f"Git operation failed: {e}")
            # In production, this would trigger an alert
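The push URL above is assembled with a string split, which assumes the configured URL always starts with `https://`. A slightly more defensive way to build the same URL is sketched below with `urllib.parse`; `build_authenticated_url` is a hypothetical helper and the host name is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit


def build_authenticated_url(repo_url, token):
    """Return repo_url with oauth2:<token> credentials embedded.

    Hypothetical helper: rejects non-HTTPS URLs instead of failing on a split,
    and drops any credentials already present in the URL.
    """
    parts = urlsplit(repo_url)
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError("Expected an https:// repository URL")

    host = parts.hostname
    if parts.port:
        host = f"{host}:{parts.port}"

    netloc = f"oauth2:{token}@{host}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


example = build_authenticated_url(
    "https://gitlab.example.com/group/repo.git",  # placeholder URL
    "glpat-EXAMPLE",                              # placeholder token
)
```

The resulting string still contains the token, so it should only ever be logged through the masking layer described earlier.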

Web Server Configuration for EDL integration

Palo Alto’s External Dynamic Lists (EDL) functionality requires access to IP list files hosted on a web server and fetches these lists via HTTP requests such as http://server.domain.com/google_bots.txt.

File Serving Architecture

The system uses a symbolic link to publish the generated files. Python scripts write files to [...]/automation/web_server_list/, while the web server serves content from ~/html-files/. A symbolic link bridges these directories, so when the Python script updates the IP files they are immediately available via the web server without any file copying or additional steps:

# Create symbolic link for seamless file serving

ln -s [...]/automation/web_server_list/ [...]/html-files/EDL_IP_lists

Permission Management: The web server runs as the webserver user, while our Python scripts run under a regular account (user). Both need access to the same files. This function lets the web server read the files created by the Python automation scripts:

    def set_web_permissions(filepath):
        """Configure permissions and ownership for the web server"""
        try:
            # Mode 644: read/write for the owner, read-only for group and others
            os.chmod(filepath, stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP | stat.S_IROTH)
            # Change ownership to user:webserver so the web server can read the file
            import pwd
            import grp
            # Get the current user and the webserver group
            current_user = os.getenv('USER', 'user')
            try:
                user_uid = pwd.getpwnam(current_user).pw_uid
                webserver_gid = grp.getgrnam('webserver').gr_gid
                # Change the owner
                os.chown(filepath, user_uid, webserver_gid)
            except (KeyError, PermissionError):
                # If the owner cannot be changed, at least the mode is correct
                print(f"⚠️ Unable to change the owner of {filepath} (may require sudo)")
            return True
        except Exception as e:
            print(f"Warning: unable to set permissions on {filepath}: {e}")
            return False

Enterprise Installation System

One of the most important aspects of the project is its installation automation. I created a three-script installation system that makes deployment repeatable and secure.

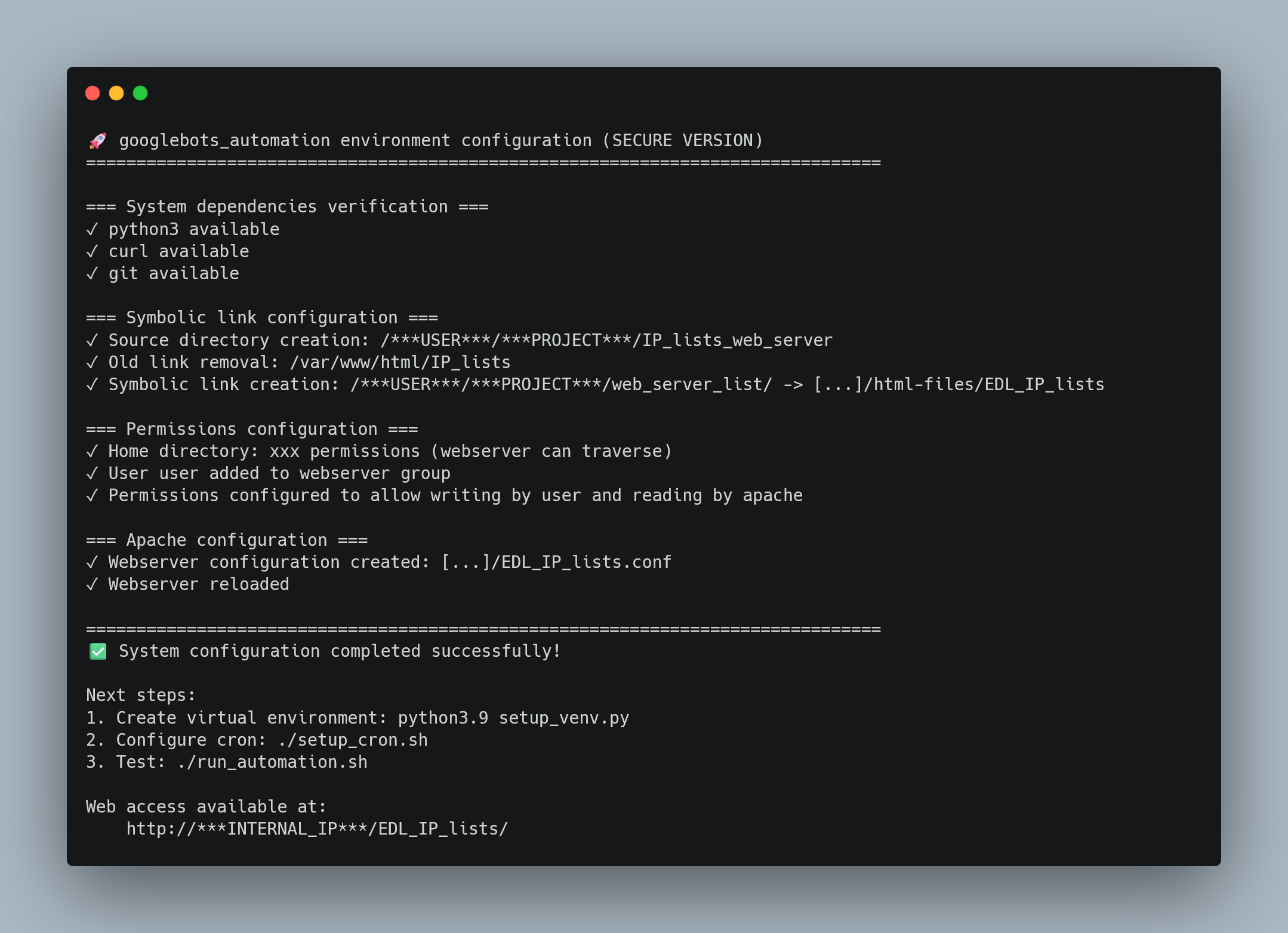

Script 1: setup.py - System Foundation

This script handles all the system-level configuration that requires root privileges:

# Key actions performed by setup.py:

1. Dependency Verification:

- Checks for python3, curl, git, webserver, etc.

- Verifies versions and installation paths

2. Directory Permissions (Critical for webserver):

- chmod xxx /home/user/ # webserver MUST be able to traverse the home directory

- chmod xxx project_directory/ # webserver needs access to project folder

3. Symbolic Link Creation:

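- Links web_server_list/ into the directory served by the web server, as described under File Serving Architecture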

4. Webserver Configuration:

- Adds user to webserver group

- Creates config file for directory indexing

- Enables directory browsing for EDL files

5. Verification:

- Tests web access to ensure configuration works

- Provides troubleshooting commands if something fails
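The dependency verification step can be sketched with the standard library alone. This is an illustration under assumptions rather than the actual `setup.py`: `find_missing_commands` is a hypothetical name, and the command list only covers the tools named above.

```python
import shutil

# Tools named in the write-up; the web server binary is left out because
# its actual name is not given.
REQUIRED_COMMANDS = ["python3", "curl", "git"]


def find_missing_commands(commands):
    """Return the commands that cannot be found on the current PATH."""
    return [cmd for cmd in commands if shutil.which(cmd) is None]


missing = find_missing_commands(REQUIRED_COMMANDS)
if missing:
    print("Missing dependencies: " + ", ".join(missing))
else:
    print("All required commands are available.")
```

Reporting every missing tool in one pass avoids the fix-one-rerun-fix-another loop during installation.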

Script 2: setup_venv.py - Python Environment & Security

Why virtual environments? In enterprise environments, servers often have multiple applications with different Python dependencies. Virtual environments isolate our dependencies so they don’t conflict with other applications or system packages.

Security enhancements:

# Enhanced setup_venv.py creates:

1. Virtual Environment Creation:

- Creates googlebots_venv/ directory with an isolated Python package installation

- Uses python3 -m venv for maximum compatibility

2. Secure Credential Management:

- Creates .env file with placeholder values

- Sets proper permissions for security

- Automatically adds .env to .gitignore

- Provides clear instructions for token replacement

3. Enterprise-Compatible Dependencies:

- Installs specific versions compatible with internal repositories

- Creates requirements.txt with exact version pins

4. Execution Script Generation:

- Creates run_automation.sh with proper environment activation

- Handles logging and error redirection automatically

5. Security Validation:

- Tests that all imports work correctly

- Validates environment variable loading

- Confirms .env protection is in place
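The credential-file step could look roughly like the sketch below. It is a sketch under stated assumptions: `create_env_file` is a hypothetical name, the placeholder values are invented, and mode `0o600` (owner read/write only) is an assumed choice, since the exact permissions are not stated here.

```python
import os
from pathlib import Path

# Variable names come from config.py; the values are placeholders.
ENV_TEMPLATE = (
    "GITLAB_TOKEN=replace-me\n"
    "GITLAB_REPO_URL=https://gitlab.example.com/group/repo.git\n"
    "GITLAB_BRANCH=main\n"
)


def create_env_file(project_dir):
    """Create .env with placeholders, restrict its mode, and git-ignore it."""
    project_dir = Path(project_dir)
    env_path = project_dir / ".env"

    # Never overwrite an existing file: it may already hold a real token.
    if not env_path.exists():
        env_path.write_text(ENV_TEMPLATE, encoding="utf-8")
    os.chmod(env_path, 0o600)  # assumed mode: owner read/write only

    # Add .env to .gitignore exactly once.
    gitignore = project_dir / ".gitignore"
    lines = gitignore.read_text(encoding="utf-8").splitlines() if gitignore.exists() else []
    if ".env" not in lines:
        lines.append(".env")
        gitignore.write_text("\n".join(lines) + "\n", encoding="utf-8")

    return env_path
```

The function is idempotent, so re-running the setup script neither resets an edited `.env` nor duplicates the `.gitignore` entry.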

Script 3: setup_cron.sh - Automation Scheduling

The final piece is scheduling with proper backup and configuration:

#!/bin/bash

# setup_cron.sh - Automated cron configuration with safety

CRON_TIME="30 5 * * *" # Every day at 5:30 AM

PROJECT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

RUN_SCRIPT="$PROJECT_DIR/run_automation.sh"

# Backup existing crontab (safety first!)

echo "✓ Sauvegarde du crontab actuel"

crontab -l > "$PROJECT_DIR/crontab_backup_$(date +%Y%m%d_%H%M%S).txt" 2>/dev/null || true

# Create new crontab with our automation

(

# Keep existing cron jobs (filter out any old googlebots entries)

crontab -l 2>/dev/null | grep -v "automation" || true

# Add our new automation job

echo "# automation - Exécution quotidienne à 5h30"

echo "$CRON_TIME $RUN_SCRIPT"

) | crontab -

echo "✓ Cron configuré avec succès"
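The filter-then-append logic of the shell script can be expressed as a small pure function, which makes its idempotency easier to see. This is only an illustrative Python sketch; `merge_crontab` and the example paths are hypothetical, and the real installer is the Bash script above.

```python
def merge_crontab(existing, schedule, command, marker="automation"):
    """Drop lines containing the marker, then append a fresh entry.

    Mirrors the grep -v "automation" step followed by the two echo lines.
    """
    kept = [line for line in existing.splitlines() if marker not in line]
    kept.append(f"# {marker} - daily run")
    kept.append(f"{schedule} {command}")
    return "\n".join(kept) + "\n"


current = "0 1 * * * /usr/bin/backup.sh\n"  # example pre-existing job
once = merge_crontab(current, "30 5 * * *", "/opt/edl/run_automation.sh")
twice = merge_crontab(once, "30 5 * * *", "/opt/edl/run_automation.sh")
print(twice, end="")
```

Running the merge twice yields the same crontab, because both the comment line and the job line contain the marker. The same property means any unrelated job whose line contains the word "automation" would also be removed, so a more specific marker may be worth considering.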

User Experience & Installation Design

One of the key focuses of this project was creating a clear user experience for installation and maintenance. The CLI interfaces are designed with visual feedback and step-by-step guidance.

CLI Output Design

Each setup script provides clear, visual feedback with:

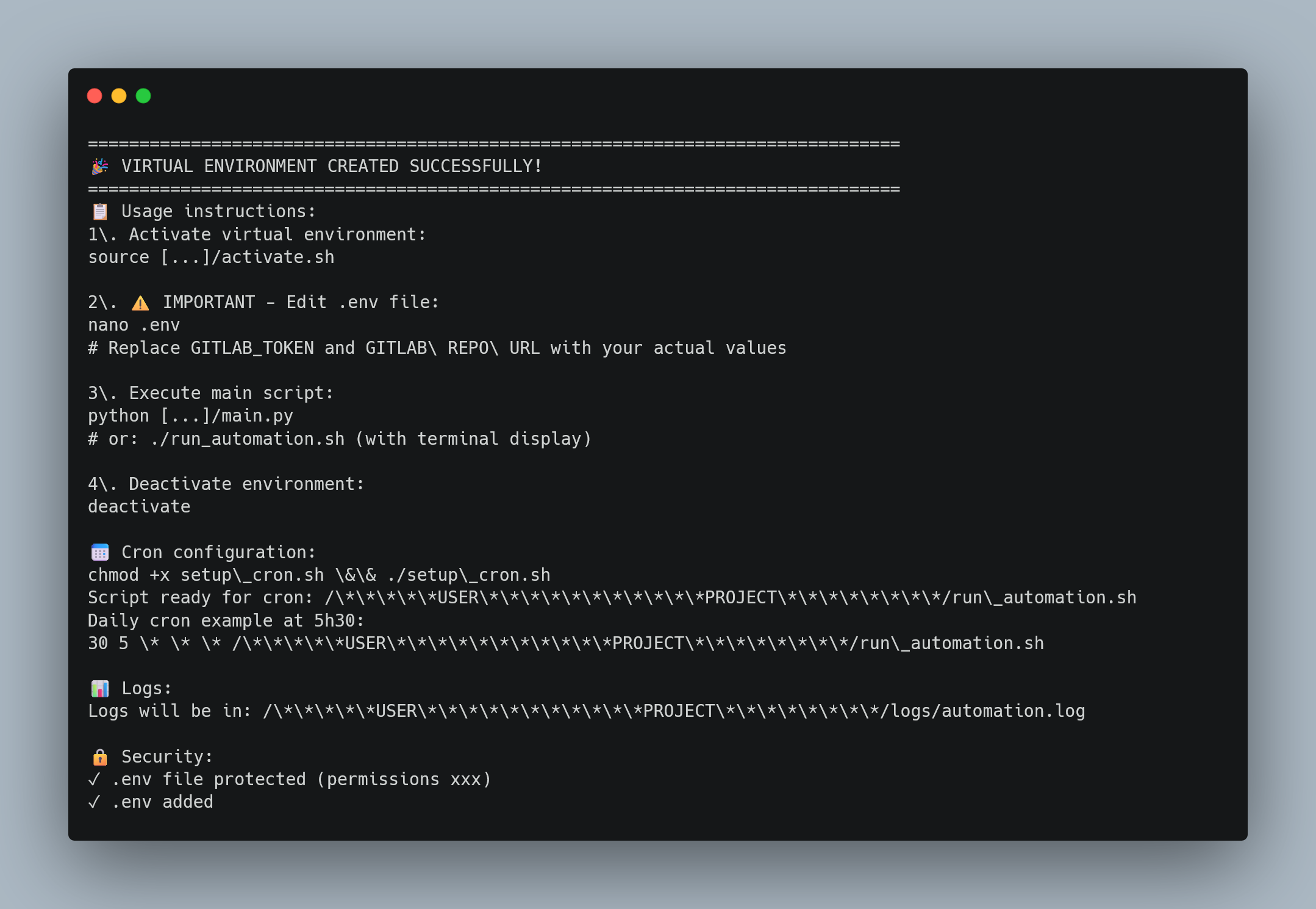

Example of setup_venv.py execution showing clear progress indicators and step-by-step feedback


Installation Checklist & Clear Next Steps

Each script tells you exactly what to do next:

Comprehensive Documentation for Team Transfer

The project includes documentation designed for knowledge transfer and team maintenance:

README_INSTALLATION.md - Deployment Guide

- Step-by-step installation with visual CLI examples

- Common troubleshooting scenarios with specific solutions

- Permission diagnostics for webserver issues

- Network connectivity checks for proxy environments

- Verification procedures to confirm successful installation

README_SCRIPTS.md - Technical Architecture

- Detailed explanation of each module and its responsibilities

- Code examples showing key functions and their purposes

- Configuration management for different environments

- Debugging procedures for common operational issues

- Security token management with rotation procedures

Maintenance & Debugging Support

The documentation is specifically designed for scenarios like:

Network Connectivity Issues:

# Quick diagnosis for network issues

curl -v -I -L -k --connect-timeout 10 https://developers.google.com/search/apis/ipranges/googlebot.json

# Check webserver access on local machine

curl --noproxy "*" http://url-server/EDL_IP_lists/

# Verify GitLab connectivity

cd git_repo && git fetch origin

Permission Troubleshooting:

# Automated permission check

sudo python3 setup.py # Re-run system setup

# Manual permission verification

ls -la /home/user/dirpath/... # Check home directory and other dir for permissions

ls -la dirpath/web_server_list/ # Check project permissions

groups user # Verify webserver group membership

Log Analysis:

# Check recent execution

tail -f logs/automation.log

# Look for errors in dedicated error log

tail -f logs/automation_error.log

# GitLab sync issues

grep "Git operation failed" logs/automation.log

Knowledge Transfer Checklist

The documentation includes complete explanations and maintenance checklists:

- Verify GitLab token validity

- Check log file sizes and rotation

- Test web access to EDL files

- Validate Google API connectivity

- Review webserver configuration
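Some of these checks lend themselves to scripting. The following is a minimal sketch, assuming a hypothetical `check_edl_file` helper and an arbitrary 48-hour freshness threshold; neither is part of the project as described.

```python
import ipaddress
import time
from pathlib import Path


def check_edl_file(path, max_age_hours=48, now=None):
    """Return a list of human-readable problems found in one EDL file."""
    path = Path(path)
    if not path.is_file():
        return [f"{path.name} is missing"]

    problems = []
    current_time = time.time() if now is None else now

    # Freshness: the daily job should have rewritten the file recently.
    age_hours = (current_time - path.stat().st_mtime) / 3600
    if age_hours > max_age_hours:
        problems.append(f"{path.name} is stale ({age_hours:.0f} h old)")

    # Content: every non-empty line must parse as an IP network.
    entries = [line.strip() for line in path.read_text(encoding="utf-8").splitlines() if line.strip()]
    if not entries:
        problems.append(f"{path.name} is empty")
    for entry in entries:
        try:
            ipaddress.ip_network(entry, strict=False)
        except ValueError:
            problems.append(f"invalid entry: {entry!r}")

    return problems
```

An empty result means the file exists, was updated recently, and contains only parseable prefixes.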

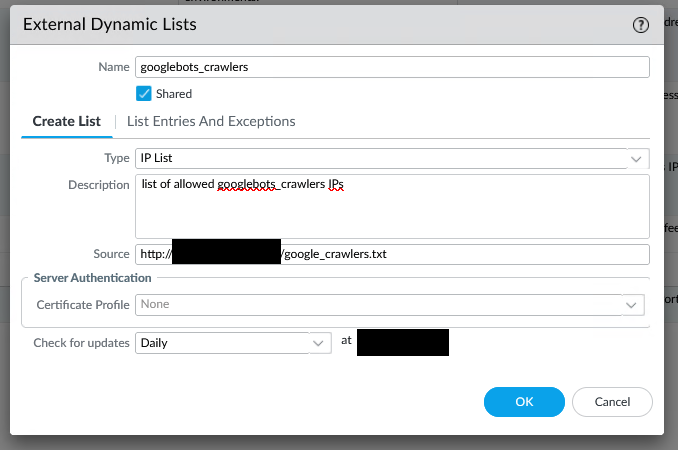

Palo Alto EDL Configuration

In the Palo Alto firewall (via Panorama), we configure four EDL objects:

EDL object configuration in Palo Alto Panorama (Panorama is the centralized management solution for Palo Alto firewalls)

Security Note: The system supports both HTTP and HTTPS for EDL communication.

Once configured, these EDLs can be used directly in security rules.

The benefit is that no commit is required to push these updates to the firewalls: the lists are dynamic. When our automation updates a file, the Palo Alto firewall downloads the new list at its next scheduled refresh and updates the allowed IPs used in its security rules.

GitLab Integration & Version Control

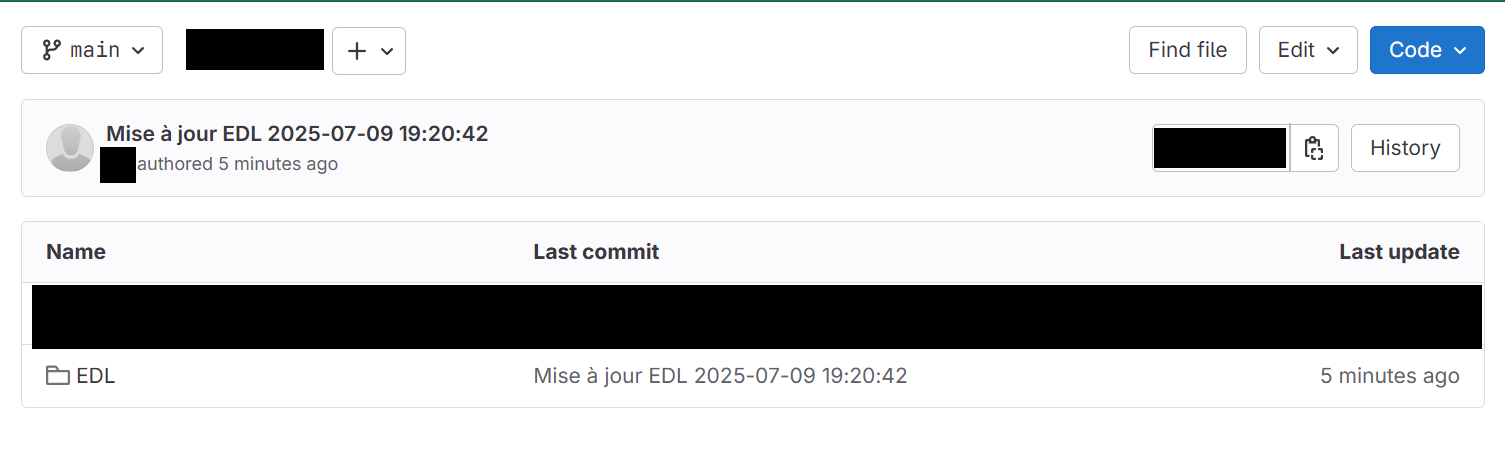

The system maintains complete audit trails through GitLab integration, with automated commits showing the daily updates:

Automated daily commits showing regular updates with timestamps


Version Control Benefits:

- Complete history of all IP list changes with timestamps

- Rollback capability if issues arise with new Google IP ranges

- Audit compliance with timestamped records of all automated changes

- Team collaboration enabling multiple administrators to track changes
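Because each run stores a timestamped copy, two snapshots can be compared to see exactly which prefixes were added or removed between runs. A minimal sketch, with a hypothetical `diff_prefix_lists` helper and documentation-only sample prefixes:

```python
def diff_prefix_lists(old_text, new_text):
    """Compare two EDL snapshots and report added and removed prefixes."""
    old = {line.strip() for line in old_text.splitlines() if line.strip()}
    new = {line.strip() for line in new_text.splitlines() if line.strip()}
    return {"added": sorted(new - old), "removed": sorted(old - new)}


# Sample snapshots using reserved documentation ranges, not real Google data.
yesterday = "192.0.2.0/24\n198.51.100.0/24\n"
today = "198.51.100.0/24\n203.0.113.0/24\n"

changes = diff_prefix_lists(yesterday, today)
print(changes)
```

In practice the two inputs would be read from two timestamped files in the Git repository, or obtained from `git diff` directly.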

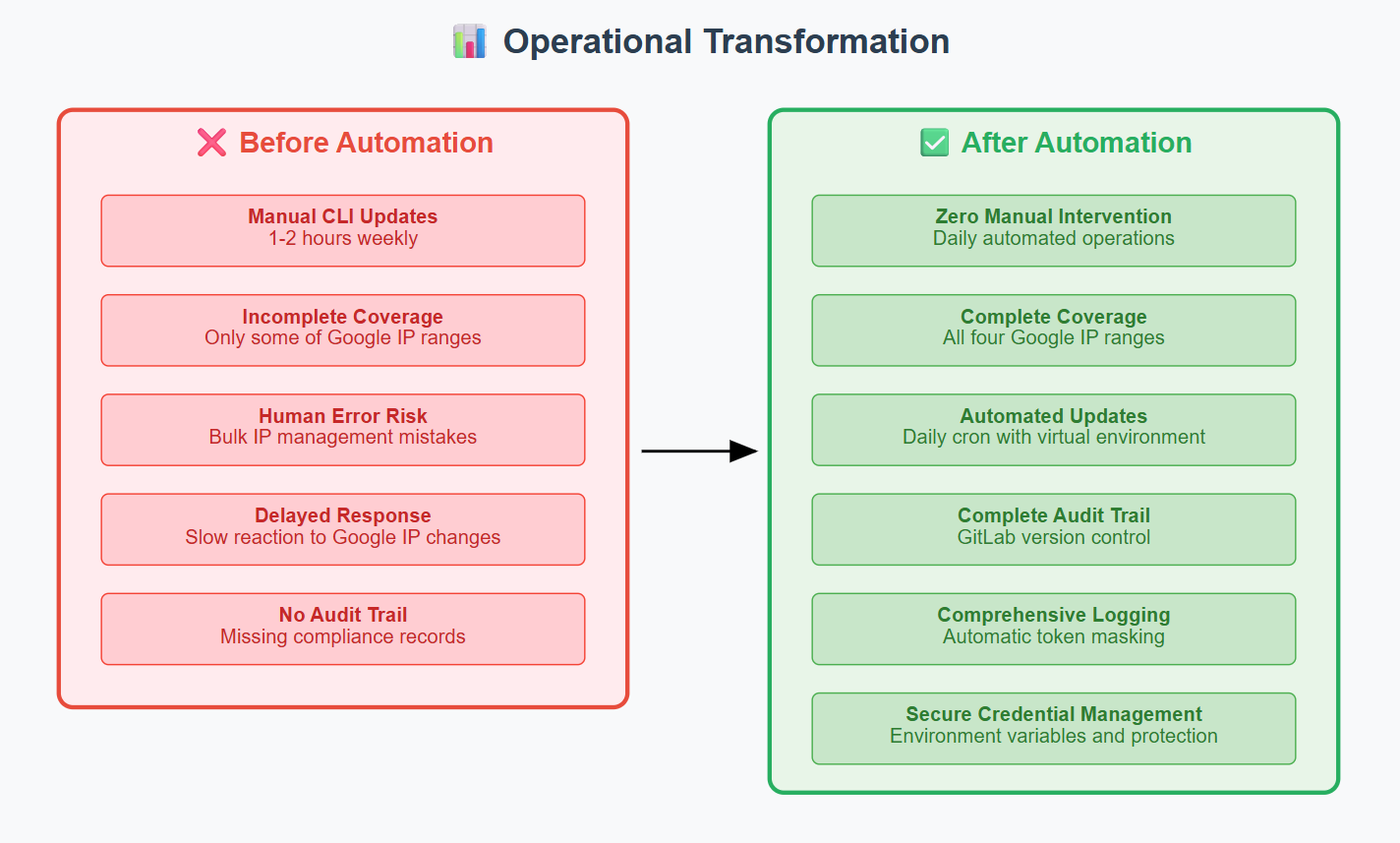

📊 Production Results & Business Impact

Operational Transformation

Business Value & Revenue Protection

Advertising Revenue Stability:

- Protected Google Ads revenue through proper crawler access ensuring accurate visibility tracking

- Preserved ad inventory value by maintaining optimal site visibility metrics

- Marketing campaign effectiveness sustained through reliable search engine indexing

Enterprise Readiness:

- Security compliance with corporate standards for credential management

- Deployment automation enabling rapid rollout to other environments

- Documentation standards meeting enterprise requirements for knowledge management

- Monitoring capabilities providing operational visibility and troubleshooting support

🔮 Future Enhancements & Cloud-Native Evolution

Planned Technical Improvements

Direct Palo Alto API Integration: While the EDL approach is rock-solid, I’ve completed preliminary testing for direct API integration that would eliminate the web server requirement entirely. However, the Palo Alto REST API calls are more complex than the EDL approach which remains more practical for this use case.

Multi-Vendor Support: The Python automation architecture and API integration, combined with secure credential management, would make it straightforward to extend support to other firewall vendors (FortiGate, Cisco ASA, etc.).

Evolution to Cloud-Native Architecture

The current implementation is fully functional and production-ready, but building it revealed opportunities for architectural evolution toward modern cloud-native practices.

Planned Enhancements and New Learnings:

- Containerization with Docker/Podman for improved portability and scalability

- GitLab CI/CD integration with GitLab Runner deployment and comprehensive YAML pipeline definitions replacing cron-based scheduling

- Centralized secret management exploring enterprise solutions like HashiCorp Vault

- Advanced monitoring with centralized logging and alerting using Prometheus/Grafana observability stack

Migration Approach: The evolution will follow a phased migration strategy to ensure business continuity, transitioning from the current VM-based approach to a containerized microservices architecture.

Note: This cloud-native transformation will be detailed in a dedicated upcoming project focused on modern DevSecOps architecture patterns.

🎓 AI-Assisted Development Lessons Learned

This project demonstrated both the acceleration benefits and risks of AI-assisted development in production environments.

Key Learnings:

- Over-Engineering Control - AI systems tend to create comprehensive but overly complex solutions. Future projects require upfront scope definition and complexity assessment to balance robustness with maintainability.

- Universal portability design - Design decisions should consider from the outset whether solutions should be enterprise-specific or architected for broader reusability, enabling community sharing and deployment beyond organizational boundaries.

🏆 Project Impact & Professional Growth

End-to-end automation project demonstrating DevSecOps practices from business problem identification to production deployment with operational procedures and security standards.

Key Achievements:

- Production automation with zero-maintenance operation and secure credential management

- Enterprise integration with existing security infrastructure and audit compliance

- Scalable architecture ready for cloud-native evolution

- End-to-end project management from requirements analysis through deployment and operational support

Professional Development:

- AI-assisted development collaboration for complex automation solutions

- Enterprise Linux administration with complex security policies and deployment

- Production troubleshooting (EDL loading issues, API access problems, system debugging)

- Security-first architecture design with credential protection and audit compliance

- Documentation as code enabling team scalability and knowledge transfer